AI scam monitoring is becoming a must-have for fintechs. Learn what to detect, how to intervene, and how Australian teams can ship safer payments fast.

AI Scam Monitoring: What Fintechs Must Build Now

Scams are winning because they’re fast. Faster than call centres. Faster than manual fraud rules. Faster than the moment a customer realises the “ATO refund” text isn’t real.

That’s why Starling’s reported launch of an AI tool for scam monitoring matters, especially for fintechs and banks watching fraud losses rise while regulators and customers demand better outcomes. The news is UK-focused, but the lesson is universal: real-time, AI-driven scam detection is becoming table stakes.

For Australian fintechs, this is also an opportunity. If you’re building payments, wallets, card issuing, or account-to-account rails, scam monitoring isn’t a bolt-on “fraud feature”. It’s a product capability that protects customers, reduces loss, and improves trust—three things that convert prospects into long-term users.

Why AI scam monitoring is different from “fraud detection”

AI scam monitoring isn’t just fraud detection with a new label. It targets a different problem: authorised push payment (APP) scams and social engineering, where the customer initiates the payment themselves.

Traditional fraud engines are great at spotting stolen credentials, impossible travel, or card-not-present anomalies. Scam payments look “legit” in the telemetry because the user is logged in, the device is known, and the transaction is consistent with the account’s permissions.

Fraud vs scam: the operational difference

Here’s the cleanest way to explain it internally:

- Fraud: attacker takes over the account or card and initiates payment.

- Scam: attacker controls the customer’s decision-making and the customer initiates payment.

That distinction matters because the intervention has to change too. Blocking everything that’s “unusual” creates false positives and angry customers. Scam monitoring needs context, conversational patterns, payee intelligence, and behavioural signals that point to coercion or deception.

What AI adds (when it’s done properly)

A good AI scam monitoring system does three things rule-based systems struggle with:

- Connects weak signals (e.g., payee history + time pressure behaviour + device actions).

- Adapts quickly when scam scripts change.

- Prioritises intervention—not every risky payment should be blocked; some need friction, some need education, some need a human callback.

If you’re a fintech leader, the goal isn’t “use AI.” The goal is to reduce scam losses without torching conversion and customer satisfaction.

What an AI scam monitoring tool typically looks for

An AI system can’t read minds, but it can spot patterns that consistently show up in scam journeys. The strongest systems combine transaction monitoring with behavioural analytics and payee intelligence.

1) Transaction and payment graph signals

Start with the basics: who’s paying whom, how often, and in what amounts.

Common scam markers include:

- First-time payments to a new payee with high value

- Rapid repeat payments (customer “tests” with $1,000 then sends $9,000)

- Out-of-pattern transfers that don’t match historical behaviour

- Funds routed through mule-like accounts (many inbound payments, quick outbound)

This is where graph-based features help: the model learns relationships across accounts and counterparties, not just single-transaction thresholds.
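
As a rough illustration of the markers above, here is a minimal Python sketch. The `Payment` type, the function names, and every threshold (the $5,000 "high value" line, the 5x test-payment ratio, the two-hour hold window) are assumptions made for the example, not values from any production system. A real graph model would learn these relationships rather than hard-code them.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Payment:
    payer: str
    payee: str
    amount: float
    at: datetime


def payment_markers(history, new, high_value=5_000.0, test_window=timedelta(hours=24)):
    """Markers for `new`, given earlier payments (all thresholds are placeholders)."""
    prior = [p for p in history if p.payer == new.payer and p.payee == new.payee]
    markers = set()
    if not prior and new.amount >= high_value:
        markers.add("first_time_high_value")
    # "Test then send": a much smaller payment to the same payee shortly before.
    for p in prior:
        recent = timedelta(0) <= new.at - p.at <= test_window
        if recent and p.amount * 5 <= new.amount:
            markers.add("test_then_large")
    return markers


def mule_like(payments, account, min_payers=3, max_hold=timedelta(hours=2)):
    """True if `account` receives from many distinct payers and forwards funds quickly."""
    inbound = [p for p in payments if p.payee == account]
    outbound = [p for p in payments if p.payer == account]
    if len({p.payer for p in inbound}) < min_payers or not outbound:
        return False
    last_in = max(p.at for p in inbound)
    # Quick outbound: money leaves within `max_hold` of the latest inbound payment.
    return any(timedelta(0) <= p.at - last_in <= max_hold for p in outbound)
```

With the $1,000 then $9,000 pattern from the list, the second payment picks up the `test_then_large` marker even though neither amount would trip a single-transaction threshold on its own.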

2) Behavioural cues during the payment journey

Scams often create urgency. That urgency leaves fingerprints:

- Short time between adding a payee and sending a large payment

- Unusual navigation patterns (jumping straight to transfer screens)

- Multiple failed attempts (wrong BSB/account, then corrected quickly)

- Session patterns consistent with being coached (long pauses, copy/paste, repeated edits)

I’ve found that behavioural signals are where teams get the biggest lift, because they’re harder for scammers to “tune” than transaction values.
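
To make those cues concrete, here is a small sketch that turns a session's event stream into named cues. The event names (`payee_added`, `payment_submitted`, and so on) and the cut-offs are hypothetical; your own telemetry will use different names and need tuned thresholds.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class SessionEvent:
    name: str      # hypothetical event name, e.g. "payee_added"
    at: datetime


def behavioural_cues(events, amount, large=5_000.0, rush=timedelta(minutes=10)):
    """Return cue names for one payment session (thresholds are placeholders)."""
    first_seen, counts = {}, {}
    for e in events:
        first_seen.setdefault(e.name, e.at)          # keep the first occurrence
        counts[e.name] = counts.get(e.name, 0) + 1

    cues = []
    added = first_seen.get("payee_added")
    sent = first_seen.get("payment_submitted")
    if added is not None and sent is not None and amount >= large:
        if timedelta(0) <= sent - added <= rush:
            cues.append("new_payee_rushed_large_payment")
    if counts.get("payee_details_rejected", 0) >= 2:
        cues.append("repeated_failed_payee_details")
    # Paste plus repeated edits is a weak hint of being coached, never proof.
    if counts.get("paste_into_payee_field", 0) >= 1 and counts.get("payee_field_edited", 0) >= 3:
        cues.append("possible_coaching")
    return cues
```

Each cue is weak on its own; the value comes from feeding them into a score alongside transaction and payee signals.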

3) Payee risk intelligence

Payee intelligence is a force multiplier. It includes:

- Known mule or suspicious accounts (internal + consortium intelligence)

- Merchant category and descriptor anomalies (for card rails)

- Recent inbound scam reports linked to the same payee details

The tricky bit is governance: you need strong evidence thresholds to label payees risky, and a process to correct mistakes.
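
One way to picture that governance is a payee register that only marks a payee risky once an evidence threshold is met, and that lets a reviewer reverse the label. This is a sketch under assumptions: the `PayeeIntel` class, its method names, and the default of three independent reports are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PayeeRecord:
    reporters: set = field(default_factory=set)   # distinct customers who reported
    consortium_flag: bool = False


class PayeeIntel:
    def __init__(self, min_independent_reports=3):
        self.min_reports = min_independent_reports
        self._records = {}

    def _record(self, payee):
        return self._records.setdefault(payee, PayeeRecord())

    def report_scam(self, payee, reporter_id):
        # A set means repeat reports from one customer count once.
        self._record(payee).reporters.add(reporter_id)

    def flag_from_consortium(self, payee):
        self._record(payee).consortium_flag = True

    def clear_after_review(self, payee):
        # Correction path: a reviewer found the label was wrong, so reset the evidence.
        self._records[payee] = PayeeRecord()

    def is_risky(self, payee):
        rec = self._records.get(payee)
        if rec is None:
            return False
        return rec.consortium_flag or len(rec.reporters) >= self.min_reports
```

Counting distinct reporters rather than raw reports is a deliberate choice here: it stops one upset customer from getting a legitimate payee labelled.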

4) Natural language and customer support signals (where permitted)

Some organisations go further by analysing:

- Scam-related keywords from chat/call transcripts

- Customer-reported scam types and scripts

- Message metadata (not content) where privacy rules require minimisation

This is highly sensitive and regulated, but when done with privacy-by-design, it can materially improve detection and triage.
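
A privacy-minimising version of keyword analysis can emit only category tags and never store or return the transcript itself. The sketch below assumes a tiny, hand-written pattern table; the categories and phrases are illustrative placeholders, not a vetted scam lexicon.

```python
import re

# Hypothetical, illustrative patterns only. A real lexicon needs specialist review.
SCAM_PATTERNS = {
    "impersonation": re.compile(r"\b(ato|tax office|bank security team)\b", re.I),
    "remote_access": re.compile(r"\b(anydesk|teamviewer|remote access)\b", re.I),
    "urgency": re.compile(r"\b(urgent(ly)?|right now|immediately)\b", re.I),
}


def scam_tags(transcript: str) -> list[str]:
    """Return sorted category tags; the transcript text is not kept or returned."""
    return sorted(tag for tag, pattern in SCAM_PATTERNS.items() if pattern.search(transcript))
```

Downstream systems then see `["impersonation", "urgency"]` rather than what the customer actually said, which keeps the triage signal while shrinking the data you hold.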

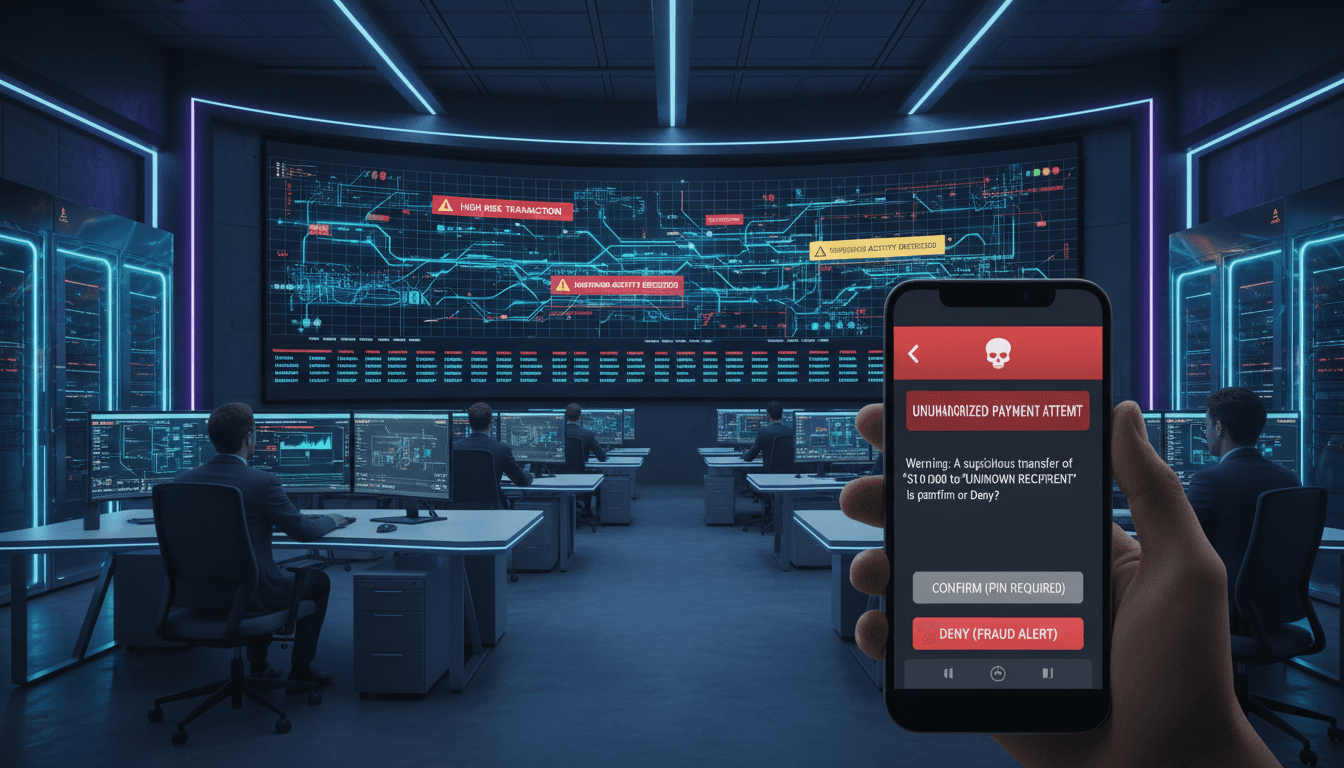

The interventions that actually reduce losses (not just “alerts”)

Monitoring without action is theatre. The best scam prevention systems pair scoring with targeted intervention.

Friction isn’t the enemy—bad friction is

If your only tool is “block payment,” you’ll either:

- Block too much and lose customers, or

- Block too little and keep eating losses

A modern scam playbook uses graduated responses:

- Just-in-time education: a warning that’s specific to the pattern detected (e.g., “This looks like an invoice redirection scam. Have you verified the bank details using a known phone number?”)

- Confirmation with a purposeful pause: a short delay for high-risk first-time payments. This is unpopular until you measure results: scam victims often later say they “wish there was a moment to think.”

- Dynamic step-up authentication: not just OTPs. Think device re-binding, voice callback, or in-app secure confirmation.

- Real-time escalation to a specialist: a fast call from a trained team can stop a scam mid-flight.

- Post-transaction containment: rapid recall/hold workflows, beneficiary outreach, and mule account containment

Scam prevention isn’t about blocking payments. It’s about interrupting manipulation.
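
The graduated responses above can be sketched as a simple ladder from risk score to intervention. The score range of 0 to 1 and the cut-offs are assumptions for the example; in practice you would set them from measured loss and drop-off data.

```python
from enum import Enum


class Intervention(Enum):
    ALLOW = "allow"
    EDUCATE = "just_in_time_education"
    PAUSE = "confirmation_with_pause"
    STEP_UP = "step_up_authentication"
    ESCALATE = "specialist_callback"


# (minimum score, response), highest first. Placeholder thresholds.
LADDER = [
    (0.90, Intervention.ESCALATE),
    (0.75, Intervention.STEP_UP),
    (0.55, Intervention.PAUSE),
    (0.30, Intervention.EDUCATE),
]


def choose_intervention(score: float) -> Intervention:
    """Pick the strongest response whose threshold the score reaches."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    for floor, response in LADDER:
        if score >= floor:
            return response
    return Intervention.ALLOW
```

Nothing in the ladder is a hard block. Blocking stays with deterministic rules, so the model's job is to choose how much friction to add.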

What Australian fintechs should take from Starling’s move

The Starling headline is a signal: AI scam monitoring is shifting from “innovative” to “expected.” In Australia, that shift is already underway.

The local pressure is structural

Australian payments are fast and getting faster. Faster rails are great for customer experience—but they compress reaction time.

From a product standpoint, that means:

- You need real-time scoring (milliseconds to seconds)

- You need clear liability and dispute handling

- You need audit-ready decisioning (models + rules + human overrides)
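
For the audit-ready point, one minimal approach is to write a record per decision that captures the model version, the rules that fired, the action taken, and any human override, then seal it with a digest so later tampering is detectable. The field names below are assumptions, and a digest alone is not a full audit trail; it is just the smallest useful building block.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    payment_id: str
    decided_at: str                      # ISO 8601 timestamp
    model_version: str
    score: float
    rules_fired: tuple
    action: str
    human_override: Optional[str] = None


def seal(record: DecisionRecord) -> dict:
    """Serialise deterministically and attach a SHA-256 digest of the payload."""
    payload = json.dumps(asdict(record), sort_keys=True)
    return {"payload": payload, "sha256": hashlib.sha256(payload.encode()).hexdigest()}


def verify(sealed: dict) -> bool:
    """Recompute the digest to check the payload has not been altered."""
    return hashlib.sha256(sealed["payload"].encode()).hexdigest() == sealed["sha256"]
```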

“Innovation” that customers feel

Customers don’t care if you used gradient boosting, deep learning, or rules. They care that:

- They didn’t lose money

- The bank helped quickly

- The experience didn’t treat them like a criminal

If you’re building an Australian fintech brand, scam monitoring is one of the few security investments that directly improves word-of-mouth. People talk about getting scammed. They talk even more about not getting scammed because their bank stepped in.

Building an AI scam monitoring program: a practical blueprint

If you want real outcomes, here’s the build plan I’d recommend to most fintech teams.

1) Start with a narrow, high-loss scam type

Pick one you can measure end-to-end, such as:

- Invoice redirection / business email compromise

- Impersonation scams (bank, telco, government)

- Marketplace payment scams

Define success as a mix of:

- Loss reduction

- False positive rate

- Customer drop-off

- Time-to-intervention
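
Here is one way those four measures could be computed from pilot outcomes. The `Outcome` fields and the exact definitions (for example, drop-off measured only on flagged legitimate payments) are assumptions; agree your own definitions before the pilot starts, or the numbers will be argued about afterwards.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Outcome:
    amount: float
    flagged: bool          # did the system intervene?
    was_scam: bool         # confirmed after the fact
    completed: bool        # did the payment go through?
    seconds_to_intervention: Optional[float] = None


def pilot_metrics(outcomes):
    legit = [o for o in outcomes if not o.was_scam]
    flagged_legit = [o for o in legit if o.flagged]
    delays = [
        o.seconds_to_intervention
        for o in outcomes
        if o.flagged and o.seconds_to_intervention is not None
    ]
    return {
        "scam_value_stopped": sum(
            o.amount for o in outcomes if o.was_scam and o.flagged and not o.completed
        ),
        "scam_value_lost": sum(o.amount for o in outcomes if o.was_scam and o.completed),
        # Share of legitimate payments that were interrupted.
        "false_positive_rate": len(flagged_legit) / len(legit) if legit else 0.0,
        # Share of interrupted legitimate payments the customer then abandoned.
        "legit_drop_off": (
            sum(not o.completed for o in flagged_legit) / len(flagged_legit)
            if flagged_legit
            else 0.0
        ),
        "median_seconds_to_intervention": median(delays) if delays else None,
    }
```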

2) Combine models with rules; don’t fetishise “pure AI”

Hybrid decisioning is the reality in finance AI.

- Rules handle compliance and deterministic controls (caps, known bad payees)

- Models handle ambiguity and pattern recognition

- Human analysts handle edge cases and continuous learning

This is also easier to defend to boards and regulators.
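
A hybrid decision flow along these lines might look like the sketch below: deterministic rules run first, the model scores whatever the rules let through, and an ambiguous middle band goes to a human. The function name, the payment dictionary shape, and the review band of 0.5 to 0.8 are assumptions for illustration.

```python
from typing import Callable


def hybrid_decision(
    payment: dict,
    model: Callable[[dict], float],
    *,
    known_bad_payees: set,
    daily_cap: float,
    review_band=(0.5, 0.8),
) -> str:
    # 1. Rules: deterministic controls that must hold regardless of the model.
    if payment["payee"] in known_bad_payees:
        return "block:known_bad_payee"
    if payment["amount"] > daily_cap:
        return "block:over_cap"

    # 2. Model: handles ambiguity with a risk score between 0 and 1.
    score = model(payment)
    low, high = review_band
    if score >= high:
        return "intervene"
    # 3. Humans: the uncertain middle band is routed to an analyst.
    if score >= low:
        return "human_review"
    return "allow"
```

Because the rule outcomes name the rule that fired, the reason for a block is easy to state, which is a large part of why this structure is easier to defend.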

3) Instrument the customer journey properly

Most fintechs under-invest here. You can’t detect scam behaviour you don’t log.

High-value signals include:

- Payee add/edit events

- Copy/paste into payee fields

- Session duration and step timing

- Device and network change events

Do this with privacy principles baked in: log what you need, minimise what you don’t.
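
In code, "log what you need, minimise what you don’t" can be enforced with an allowlist per event type, so unexpected fields are dropped before anything is written. The event names and fields here are hypothetical, and the keyed-hash helper is one common way to pseudonymise identifiers, not a complete privacy design.

```python
import hashlib
import hmac
import time

# Hypothetical event schema: only these fields are ever logged per event type.
ALLOWED_FIELDS = {
    "payee_added": {"payee_ref"},
    "payee_edited": {"payee_ref", "field"},
    "paste_detected": {"field"},            # the fact of a paste, never the pasted text
    "step_completed": {"step", "elapsed_ms"},
    "device_changed": {"device_ref"},
}


def pseudonymise(value: str, key: bytes) -> str:
    """Keyed hash so raw account or device identifiers never reach the log."""
    return hmac.new(key, value.encode(), hashlib.sha256).hexdigest()[:16]


def build_event(name: str, session_id: str, attrs: dict, now=None) -> dict:
    if name not in ALLOWED_FIELDS:
        raise ValueError(f"unknown event: {name}")
    kept = {k: v for k, v in attrs.items() if k in ALLOWED_FIELDS[name]}
    ts = time.time() if now is None else now
    return {"event": name, "session": session_id, "ts": ts, **kept}
```

A paste event built this way records which field was pasted into and when, and silently discards the pasted value even if a client sends it.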

4) Treat explainability as a product requirement

If you can’t explain why you warned or blocked, you’ll lose customers and waste support time.

Good systems produce:

- Top contributing factors (e.g., “first-time payee + urgent transfer pattern”)

- A customer-friendly explanation

- An internal analyst view with deeper evidence
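
A minimal sketch of those three outputs, assuming a simple additive score where each feature has a fixed weight. The weights, feature names, and customer wording are invented for the example. A real model would need a proper attribution method, but the shape of the output is the point.

```python
# Hypothetical weights for an additive score.
WEIGHTS = {
    "first_time_payee": 0.35,
    "rushed_after_payee_add": 0.25,
    "amount_out_of_pattern": 0.20,
    "payee_recently_reported": 0.40,
}

CUSTOMER_TEXT = {
    "first_time_payee": "You have not paid this account before.",
    "rushed_after_payee_add": "This payment was started very soon after adding the payee.",
    "amount_out_of_pattern": "This amount is larger than your usual transfers.",
    "payee_recently_reported": "Other customers have reported problems with this account.",
}


def explain(features: dict, top_k: int = 2) -> dict:
    """Return top factors, a customer message, and the full analyst breakdown."""
    contributions = {
        name: WEIGHTS[name] * float(value)
        for name, value in features.items()
        if name in WEIGHTS
    }
    ranked = sorted(
        (name for name, c in contributions.items() if c > 0),
        key=lambda name: contributions[name],
        reverse=True,
    )
    top = ranked[:top_k]
    return {
        "top_factors": top,
        "customer_message": " ".join(CUSTOMER_TEXT[name] for name in top),
        "analyst_view": contributions,
    }
```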

5) Close the loop with feedback and learning

Scam monitoring improves when you feed it outcomes:

- Confirmed scam reports

- Chargebacks and disputes

- Beneficiary bank responses

- Analyst decisions

This turns your system from one that only reacts into one that keeps improving.
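
One simple way to close that loop is to turn outcome sources into training labels with an explicit precedence order, so conflicting signals resolve the same way every time. The source names and their ordering below are assumptions; the ordering in particular is a policy choice your fraud team should own.

```python
from typing import Optional

# Hypothetical outcome sources, strongest evidence first.
LABEL_PRIORITY = [
    "confirmed_scam_report",
    "dispute_upheld",
    "beneficiary_bank_confirmed",
    "analyst_marked_scam",
    "analyst_cleared",
]


def label_for(outcomes: set) -> Optional[int]:
    """1 = scam, 0 = legitimate, None = no usable outcome yet."""
    for source in LABEL_PRIORITY:
        if source in outcomes:
            return 0 if source == "analyst_cleared" else 1
    return None


def training_rows(decisions: list, outcomes_by_payment: dict) -> list:
    """Join logged decision features with outcomes; skip payments with no label."""
    rows = []
    for decision in decisions:
        label = label_for(outcomes_by_payment.get(decision["payment_id"], set()))
        if label is not None:
            rows.append({**decision["features"], "label": label})
    return rows
```

Payments with no outcome are left out rather than assumed legitimate, since unreported scams would otherwise be labelled as safe.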

Common questions teams ask (and the blunt answers)

“Will AI scam monitoring increase false positives?”

It can—if you treat it like a blunt fraud engine. Done right, it reduces false positives because it targets contextual risk rather than simple thresholds.

“Do we have enough data to train a model?”

Most fintechs have enough to start with a hybrid approach. Begin with heuristics + lightweight models, then iterate. Waiting for “perfect data” is how scam losses compound.

“What’s the fastest win we can ship?”

A real-time risk score that triggers smart warnings for first-time high-value payments is usually the quickest measurable improvement. Pair it with a rapid escalation path.

Where this fits in the AI in Finance and FinTech series

Across this series, one theme keeps showing up: AI works best when it’s paired with strong decisioning, good data, and clear customer outcomes. Scam monitoring is the clearest example because the ROI isn’t abstract—losses avoided are visible, and trust gained is durable.

If Starling’s AI scam monitoring launch nudges the market, Australian fintechs should respond with intent, not panic. Build a system that’s real-time, explainable, and designed around customer behaviour—not just transactions.

The next 12 months will separate teams that add fraud features from teams that engineer safety into the product. Which side are you on?