Crisis-ready finance needs AI that spots deepfakes fast and reduces downtime impact. Practical controls, playbooks, and culture shifts for banks and fintechs.

Crisis-Ready Finance: AI vs Deepfakes & Downtime

Deepfakes and outages used to be “edge cases” in financial services. Now they’re board-level risks—because they hit the two things finance sells: trust and availability. One convincing synthetic voice can trigger an unauthorized transfer. One hour of downtime can cascade into missed payroll runs, failed settlements, and a customer support pile-on that lasts for days.

The uncomfortable truth: most organisations still treat these incidents as either cyber problems or IT problems. They’re neither. They’re business continuity problems, and solving them takes more than a runbook. It takes a crisis-ready culture backed by AI systems that can detect, decide, and respond faster than humans can coordinate in a war room.

This post is part of our AI in Finance and FinTech series. It focuses on the operational reality many banks and fintechs are living through (deepfake-enabled fraud, platform downtime, and rising customer expectations) and on how AI can help turn resilience into a competitive advantage.

Deepfakes are a finance problem, not a “future threat”

Deepfakes are already changing fraud economics: they reduce the attacker’s cost of impersonation and increase success rates for social engineering. In practice, that means a wider pool of criminals can attempt higher-value scams, more often.

Where deepfakes hit first: voice, video, and “know your customer”

Voice cloning is the fastest path to real damage. Many payment approvals and call-centre verification flows still rely on informal human judgement: “That sounds like them.” Criminals know it.

Common pressure points include:

- Call-centre account takeover using a cloned voice paired with leaked personal data

- CEO/CFO impersonation to push urgent payments (classic business email compromise, upgraded)

- Synthetic video in remote onboarding or remediation interviews

- Manipulated evidence in disputes (edited videos, altered screenshots, fake audio)

Here’s the stance I’ll take: if your controls assume humans can reliably spot fakes, you’re behind. Humans are the last line of defence, not the first.

AI countermeasures that actually work in production

AI for fraud detection isn’t one model that “spots deepfakes.” It’s a stack of signals that makes impersonation expensive again.

Practical layers to deploy:

- Liveness and challenge-response for high-risk interactions

  - Randomised prompts (read a phrase, turn your head, blink on cue) beat static selfie/video checks.

- Multimodal risk scoring

  - Combine device intelligence, behavioural biometrics, network signals, transaction context, and identity graph features.

- Voice anti-spoofing models

  - Detect artefacts like spectral inconsistencies, compression patterns, and synthesis “fingerprints.”

- Step-up authentication by risk, not by channel

  - If the request is unusual (new payee + high amount + urgency language), require additional proof, even if the caller “sounds right.”

Snippet-worthy rule: Treat deepfakes like malware—assume they’ll get past one control, then design for layered containment.
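The step-up rule from the list above can be sketched in a few lines. This is a minimal illustration, not a production control: the `PaymentRequest` fields, the `HIGH_AMOUNT_THRESHOLD` value, and the `URGENCY_PHRASES` list are all assumptions made up for the example, and real urgency detection would need far more than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; tune against your own data.
HIGH_AMOUNT_THRESHOLD = 10_000  # in account currency
URGENCY_PHRASES = ("urgent", "immediately", "right now", "before close of business")


@dataclass
class PaymentRequest:
    amount: float
    payee_is_new: bool
    transcript: str            # text of what the caller asked for
    voice_match_passed: bool   # outcome of the voice check, never trusted on its own


def has_urgency_language(transcript: str) -> bool:
    """Naive keyword check standing in for a real language model or classifier."""
    text = transcript.lower()
    return any(phrase in text for phrase in URGENCY_PHRASES)


def requires_step_up(req: PaymentRequest) -> bool:
    """Decide on step-up from the request's risk, not from how the caller sounds."""
    unusual = (
        req.payee_is_new
        and req.amount >= HIGH_AMOUNT_THRESHOLD
        and has_urgency_language(req.transcript)
    )
    # A failed voice check always steps up; a passed one never waives the rule.
    return unusual or not req.voice_match_passed
```

The design choice worth noticing is that `voice_match_passed` can only add friction. It can never remove it, which is what "even if the caller sounds right" means in practice.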

Downtime is the new reputational risk (and customers won’t wait)

Outages are no longer rare, and they are no longer private. Status pages, social media, and real-time payments have made downtime a public event. For banks and fintechs, “we’re investigating” isn’t enough when customers can’t tap to pay or merchants can’t reconcile.

Why outages are harder now

Modern finance stacks are more capable—but also more interdependent:

- Microservices and APIs increase failure paths.

- Third-party dependencies (KYC, card processors, cloud regions) mean “your outage” might start elsewhere.

- Real-time payments compress recovery windows.

- Regulatory expectations around operational resilience have tightened in multiple markets.

A crisis-ready culture recognises that reliability is not just an SRE KPI. It’s a product promise.

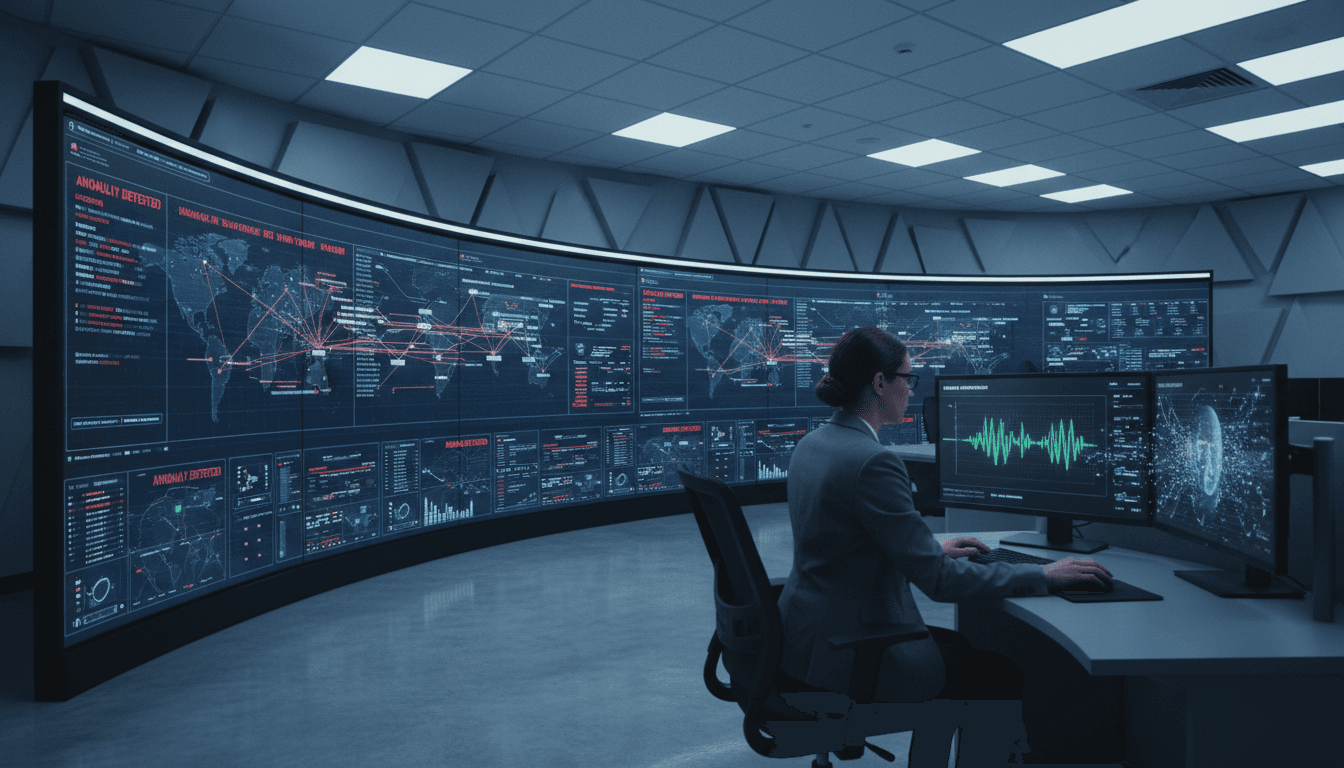

Where AI improves operational resilience

AI isn’t a magic fix for uptime. But it can shorten detection and diagnosis dramatically, which is where most incident time gets burned.

High-impact AI use cases for operational resilience in banking and fintech:

- Anomaly detection on service telemetry (latency, error rates, queue depth) to surface early warning signals

- Log clustering and incident summarisation so responders don’t drown in noise

- Root-cause suggestions based on historical incidents (“this pattern matches the last cache invalidation failure”)

- Change risk scoring for releases (flagging deployments likely to trigger incidents)

- Automated runbook execution for safe, reversible actions (restart, scale, failover) with approvals for higher-risk steps
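To make the first use case concrete, here is a minimal sketch of anomaly detection on a single telemetry stream using a rolling z-score. It is one simple technique among many, chosen for readability; the class name, window size, and threshold are assumptions for the example rather than recommended settings.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flags a reading that sits far from the mean of a recent window."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_history: int = 5):
        self.values = deque(maxlen=window)  # most recent readings only
        self.threshold = threshold          # how many standard deviations count as unusual
        self.min_history = min_history      # readings needed before judging anything

    def observe(self, value: float) -> bool:
        """Return True if the reading looks anomalous, then record it."""
        is_anomaly = False
        if len(self.values) >= self.min_history:
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Note: anomalous readings also enter the window, so a sustained
        # shift eventually becomes the new baseline. That is a simplification.
        self.values.append(value)
        return is_anomaly
```

Feeding it latency samples one at a time (for example `detector.observe(latency_ms)`) gives an early warning signal per reading. A production system would typically track many metrics at once and account for seasonality, which this sketch ignores.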

The best teams I’ve worked with treat AI as a co-pilot for responders, not an autopilot for production.

“Crisis-ready culture” is a system: people, process, and AI

Culture sounds fluffy until you’re 37 minutes into an incident and nobody knows who can approve a customer-impacting rollback.

A crisis-ready culture is simply this: clear ownership, rehearsed decisions, and fast truth-finding. AI supports all three—if you build it into the operating model.

The crisis-ready operating model (what to build)

If you want a concrete blueprint, start here:

- Define “crown jewels” and failure budgets

  - Which customer journeys must never fail (payments, login, card authorisation)?

  - What’s the maximum tolerable downtime per journey?

- Set decision rights before the incident

  - Who can freeze outbound payments? Who can trigger failover? Who can communicate externally?

- Run simulations that include deepfakes and outages

  - Tabletop exercises are good; live-fire is better.

- Instrument everything that matters

  - You can’t respond to what you can’t see. Observability is a prerequisite to AI.

- Embed learning loops

  - Every incident produces model improvements, new rules, and better playbooks.
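The "maximum tolerable downtime per journey" question becomes easier to answer once it is turned into arithmetic. The sketch below converts an availability target into a downtime budget for a period. The function names and the example targets are illustrative assumptions, not figures from any regulator or from this post.

```python
def downtime_budget_minutes(availability_target: float, period_days: int = 30) -> float:
    """Minutes of downtime allowed in the period for a given availability target.

    availability_target is a fraction, e.g. 0.999 for "three nines".
    """
    total_minutes = period_days * 24 * 60
    return total_minutes * (1.0 - availability_target)


def budget_remaining(
    availability_target: float,
    downtime_so_far_minutes: float,
    period_days: int = 30,
) -> float:
    """Budget left after the downtime already incurred (negative means overspent)."""
    return downtime_budget_minutes(availability_target, period_days) - downtime_so_far_minutes


# Hypothetical per-journey targets, purely for illustration.
JOURNEY_TARGETS = {"payments": 0.9995, "login": 0.999}
```

With these example numbers, a 99.9% target over 30 days allows roughly 43.2 minutes of downtime, and a 99.95% target allows roughly 21.6. A single 40-minute login outage would consume almost the whole monthly budget, which is the kind of fact decision-makers need before the incident, not during it.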

The role of AI in decision-making (and the guardrails you need)

AI can accelerate response, but it can also amplify mistakes if you let it act without constraints.

Good guardrails:

- Human-in-the-loop for high-impact actions (payment holds, customer comms, failover)

- Audit trails for every AI recommendation and action

- Policy-as-code constraints (“never auto-disable fraud controls during peak hours”)

- Red-team testing against fraud models and incident automation

- Model monitoring for drift, false positives, and performance regressions
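Three of these guardrails (human-in-the-loop, audit trails, and policy-as-code) fit naturally into one small gate that every automated action must pass. The sketch below is an assumption-heavy illustration: the action names, the peak window, and the in-memory `audit_log` are invented for the example, and a real system would persist the log and load policies from reviewed configuration.

```python
from dataclasses import dataclass
from datetime import time

# Hypothetical policy values for illustration only.
PEAK_START = time(8, 0)
PEAK_END = time(20, 0)
BLOCKED_DURING_PEAK = {"disable_fraud_control"}
NEEDS_HUMAN_APPROVAL = {"payment_hold", "customer_comms", "failover"}


@dataclass(frozen=True)
class ProposedAction:
    name: str                  # what the automation wants to do
    proposed_at: time          # local time of the proposal
    human_approved: bool = False


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


audit_log = []  # in-memory stand-in for a durable audit trail


def evaluate(action: ProposedAction) -> Decision:
    """Check an automation-proposed action against policy and record the outcome."""
    in_peak = PEAK_START <= action.proposed_at < PEAK_END
    if action.name in BLOCKED_DURING_PEAK and in_peak:
        # Hard constraint on the automation path: approval does not unlock it here.
        decision = Decision(False, "blocked: not permitted during peak hours")
    elif action.name in NEEDS_HUMAN_APPROVAL and not action.human_approved:
        decision = Decision(False, "pending: high-impact action needs human approval")
    else:
        decision = Decision(True, "allowed")
    audit_log.append((action, decision))  # every decision is logged, allowed or not
    return decision
```

Low-risk actions such as a restart pass straight through, high-impact ones wait for a person, and the peak-hours rule cannot be bypassed by the automation at all. Every outcome lands in the log either way.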

One-liner: Speed wins incidents; governance prevents self-inflicted wounds.

AI fraud detection that’s ready for deepfakes: a practical playbook

Banks and fintechs often ask, “Where do we start?” Start where the money moves and where humans are easiest to trick.

Step 1: Map your highest-risk money movement paths

Prioritise flows that combine high value + low friction + remote verification, such as:

- Adding a new payee

- Raising transfer limits

- Changing contact details (email/phone) followed by a payment

- Merchant bank detail changes

- Customer support-driven overrides

Step 2: Use behaviour, not biography

Static personal data (DOB, address) is broadly compromised. Behaviour is harder to steal.

Strong behavioural signals include:

- Typing cadence and navigation patterns

- Device and browser fingerprints

- Session-level anomalies (copy/paste spikes, unusual retries)

- Payee graph distance (a brand-new beneficiary versus a long-standing one)

Step 3: Make step-up authentication feel targeted

Customers tolerate friction when it’s clearly tied to risk. They hate random challenges.

A good risk engine triggers step-up when multiple signals align:

- New device + unusual location + new payee + urgent transfer language

That’s where AI-driven risk scoring earns its keep.
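As a rough illustration of "multiple signals align", here is a toy additive score in which no single signal is enough to trigger a challenge. The weights and threshold are invented for the example; a real risk engine would learn them from labelled outcomes rather than hard-code them.

```python
# Hypothetical integer weights (points) for each signal; illustration only.
SIGNAL_WEIGHTS = {
    "new_device": 30,
    "unusual_location": 25,
    "new_payee": 25,
    "urgent_language": 20,
}
STEP_UP_THRESHOLD = 60  # chosen so that at least three signals must align


def risk_score(signals: dict) -> int:
    """Sum the weights of every signal that is present and true."""
    return sum(
        weight
        for name, weight in SIGNAL_WEIGHTS.items()
        if signals.get(name, False)
    )


def should_step_up(signals: dict) -> bool:
    """Challenge the customer only when enough signals point the same way."""
    return risk_score(signals) >= STEP_UP_THRESHOLD
```

A customer who simply bought a new phone scores 30 and sails through. The same customer on a new device, in an unusual location, paying a new payee crosses the threshold and gets challenged, which is friction they can understand.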

Step 4: Prepare for the “false positive tax”

Every fraud model creates operational load. Plan for it.

- Build case management that prioritises by loss expectancy

- Add explainability that a fraud analyst can act on (“new device + first-time payee + mismatch in voice liveness”)

- Measure time-to-decision and analyst throughput as core metrics
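Prioritising by loss expectancy can be as simple as sorting the queue by probability times amount. The sketch below assumes a model that outputs a fraud probability per case; the `FraudCase` fields and the reason strings are hypothetical names for the example.

```python
from dataclasses import dataclass


@dataclass
class FraudCase:
    case_id: str
    fraud_probability: float  # model score in [0, 1], assumed to be calibrated
    amount_at_risk: float     # value that would be lost if the case is fraud
    reasons: tuple = ()       # analyst-readable explanations for the alert

    @property
    def expected_loss(self) -> float:
        """Loss expectancy: probability of fraud times the amount exposed."""
        return self.fraud_probability * self.amount_at_risk


def prioritise(cases: list) -> list:
    """Return cases ordered so the largest expected loss is worked first."""
    return sorted(cases, key=lambda case: case.expected_loss, reverse=True)
```

Note what this ordering does: a 20% alert on a 5,000 transfer outranks a 90% alert on a 100 transfer. Carrying the `reasons` alongside each case gives the analyst something to act on as soon as the case reaches the top of the queue.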

The holiday effect: why December incidents feel worse

Late December is a stress test for finance teams. Volumes spike, staffing thins out, and customers are less patient because they’re travelling, shopping, and relying on instant payments.

That’s why crisis readiness matters right now, not “next quarter.” If your incident response depends on a few key people being online, you don’t have resilience—you have hope.

A realistic December-ready checklist:

- Freeze risky changes during peak days unless they’re urgent

- Pre-stage communications templates for outages and fraud waves

- Tighten monitoring on payment rails, onboarding, and authentication

- Run one deepfake tabletop exercise focused on call-centre takeover

What to do next (if you want resilience that holds up)

Crisis-ready culture isn’t a poster on a wall. It’s an operating system—and AI is becoming a core component of that OS for fraud detection, operational resilience, and real-time monitoring and response.

If you’re leading risk, fraud, engineering, or operations in a bank or fintech, I’d start with two moves that pay back quickly:

- Stand up a unified risk view that correlates identity signals, transaction behaviour, and platform health.

- Rehearse your worst day—deepfake impersonation plus partial outage—then redesign controls and runbooks based on what breaks.

The broader theme in our AI in Finance and FinTech series is simple: AI is most valuable when it’s attached to a clear business outcome. Right now, the outcome that matters is staying trustworthy when someone is actively trying to make you fail.

If deepfakes and downtime happen in the same week, will your organisation respond like a team that’s practised, or a team that’s improvising?