Nvidia’s SchedMD buy highlights the real AI bottleneck: reliable open-source infrastructure. Here’s what it means for scalable AgriTech AI.

Nvidia’s Open-Source AI Bet: What It Means for AgriTech

A lot of AI projects don’t fail because the model is bad. They fail because the infrastructure can’t keep up—jobs collide, GPUs sit idle, costs spike, and the team can’t reliably reproduce results.

That’s why Nvidia’s acquisition of SchedMD, the company behind the open-source workload manager Slurm, matters well beyond data centres and Big Tech headlines. Slurm is the “traffic controller” used to schedule and run large compute jobs across clusters. Nvidia is signalling that open-source AI isn’t just about models—it’s about the boring, critical plumbing that makes AI practical at scale.

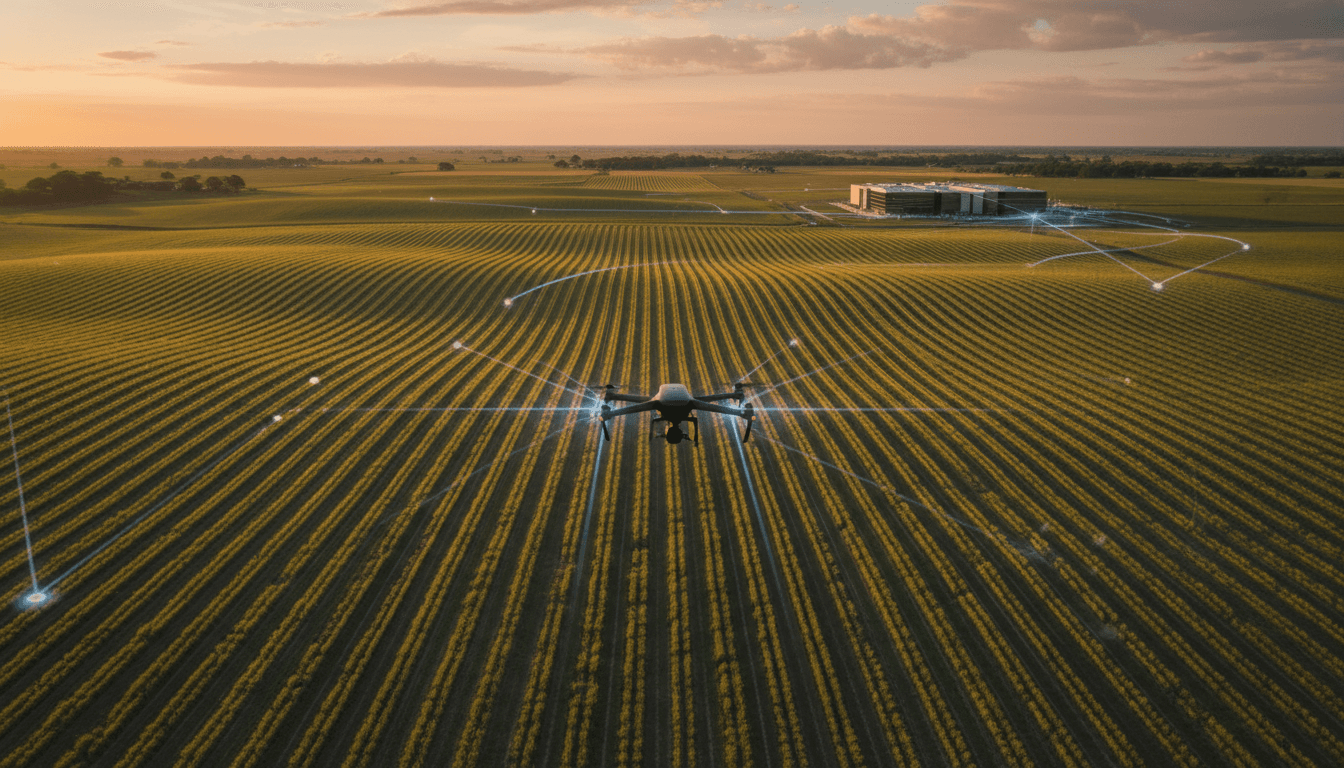

This is especially relevant for Australian agricultural AI and AgriTech teams. Whether you’re training yield prediction models, running computer vision for crop monitoring, or building decision-support tools for irrigation and fertiliser optimisation, you’re often bottlenecked by compute scheduling, governance, and cost control. The reality? If you can’t run AI reliably, you can’t run it profitably.

Nvidia didn’t buy a model company—Nvidia bought reliability

Nvidia’s move is straightforward: it wants to strengthen the software layer that keeps its hardware indispensable. CUDA already anchors developers to Nvidia GPUs. Now Slurm strengthens the operational layer that manages massive training and inference workloads.

SchedMD’s Slurm is widely used in high-performance computing (HPC) and AI environments to:

- Queue and prioritise jobs (training runs, batch inference, simulation)

- Allocate resources (GPUs/CPUs/memory) efficiently

- Enforce policies (who gets what compute, when)

- Provide operational visibility (what ran, where, and why it failed)

For agri-enterprises, this matters because AI workloads are seasonal and spiky:

- Pre-planting: soil and moisture modelling, scenario analysis

- In-season: frequent satellite/drone imagery processing for crop monitoring

- Pre-harvest: yield prediction refreshes and logistics planning

- Post-harvest: benchmarking and model retraining with updated outcomes

Slurm-style scheduling is how you stop those spikes from turning into chaos.
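To make the scheduler's role less abstract, here is a minimal Python sketch that renders a Slurm batch script for one GPU job. The `#SBATCH` directives are standard Slurm options, but everything else is an assumption for illustration: the `JobSpec` class, the `gpu` partition name, the resource sizes, and the `infer.py` command are hypothetical and would differ on a real cluster.

```python
from dataclasses import dataclass


@dataclass
class JobSpec:
    """Hypothetical description of one batch job; field names are illustrative."""
    name: str
    command: str
    partition: str = "gpu"        # assumed partition name; this is site-specific
    gpus: int = 1
    cpus: int = 4
    memory_gb: int = 32
    time_limit: str = "02:00:00"  # HH:MM:SS wall-clock limit


def render_sbatch(spec: JobSpec) -> str:
    """Render a Slurm batch script from a job description."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={spec.name}",
        f"#SBATCH --partition={spec.partition}",
        f"#SBATCH --gres=gpu:{spec.gpus}",
        f"#SBATCH --cpus-per-task={spec.cpus}",
        f"#SBATCH --mem={spec.memory_gb}G",
        f"#SBATCH --time={spec.time_limit}",
        "#SBATCH --output=%x-%j.out",  # %x = job name, %j = job ID
        "",
        spec.command,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical weekly crop stress inference job.
script = render_sbatch(JobSpec(name="crop-stress", command="python infer.py --week 12"))
print(script)
```

Saving that output to a file and passing it to `sbatch` is how a job would typically enter the queue. The point is that the resource request lives in the job itself, so the scheduler, not a spreadsheet, decides when it runs.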

Why “open source” is the point, not a footnote

Nvidia has said it will continue distributing SchedMD’s software on an open-source basis. That’s a big deal.

Open-source infrastructure is attractive in agriculture because it reduces the two biggest blockers I see in the field:

- Vendor lock-in fear (especially for co-ops, growers’ groups, and mid-market agri-businesses)

- Long procurement cycles (common in enterprise and government-adjacent supply chains)

Open source lowers the barrier to trial—and trials are how agri AI gets adopted.

The hidden bottleneck in precision agriculture AI: scheduling and throughput

Most teams talk about model accuracy. The commercial impact often comes from throughput: how many paddocks, images, farms, and scenarios you can process per day at a predictable cost.

Here’s what scheduling changes in practical terms.

Crop monitoring at scale: from “cool demo” to daily ops

Computer vision for crop monitoring usually starts with a pilot:

- A few drone flights

- Some labelled imagery

- One model run on a single workstation

Then you scale. Suddenly you’re processing:

- Multi-spectral images across many properties

- Time-series data across weeks

- Multiple model versions (because the agronomy team wants comparisons)

Without a proper scheduler, teams resort to spreadsheets and informal rules like “don’t start a run until Mark finishes his.” That doesn’t scale.

With cluster scheduling (Slurm is a common choice), you can:

- Prioritise urgent inference (e.g., pest outbreak detection) over long retraining jobs

- Run jobs “overnight” automatically when compute is cheaper or less contested

- Create guardrails like GPU quotas per team to prevent budget blowouts

This is how you make precision agriculture AI dependable enough for operational decisions.
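The priority and quota ideas above can be sketched in a few lines of Python. This is a toy model, not how Slurm works internally: the `ToyScheduler` class, the team names, and the quota numbers are all invented for illustration. It shows urgent jobs going first, per-team GPU caps, and smaller jobs filling gaps while a large job waits.

```python
import heapq
from collections import defaultdict
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class Job:
    priority: int                      # lower number = more urgent
    seq: int                           # tie-breaker: submission order
    name: str = field(compare=False)
    team: str = field(compare=False)
    gpus: int = field(compare=False)


class ToyScheduler:
    def __init__(self, total_gpus, team_quota):
        self.free_gpus = total_gpus
        self.team_quota = team_quota   # max GPUs each team may hold at once
        self.in_use = defaultdict(int)
        self.queue = []
        self._seq = count()

    def submit(self, name, team, gpus, priority):
        heapq.heappush(self.queue, Job(priority, next(self._seq), name, team, gpus))

    def dispatch(self):
        """Start every queued job that fits, most urgent first."""
        started, waiting = [], []
        while self.queue:
            job = heapq.heappop(self.queue)
            within_quota = self.in_use[job.team] + job.gpus <= self.team_quota[job.team]
            if job.gpus <= self.free_gpus and within_quota:
                self.free_gpus -= job.gpus
                self.in_use[job.team] += job.gpus
                started.append(job.name)
            else:
                waiting.append(job)    # stays queued until resources free up
        for job in waiting:
            heapq.heappush(self.queue, job)
        return started


sched = ToyScheduler(total_gpus=4, team_quota={"research": 2, "ops": 4})
sched.submit("retrain-yield-model", team="research", gpus=4, priority=5)
sched.submit("pest-outbreak-inference", team="ops", gpus=2, priority=0)
sched.submit("ablation-study", team="research", gpus=2, priority=5)
started = sched.dispatch()
print(started)  # ['pest-outbreak-inference', 'ablation-study']
```

The retraining job was submitted first but waits, because it exceeds both the free GPUs and the research quota. The pest detection job jumps the queue on priority, and the smaller research job fills the remaining capacity.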

Yield prediction and simulation: reproducibility is money

Yield prediction looks simple on paper: weather, soil, management practices, and satellite data in—yield out.

In practice, teams run:

- Hyperparameter searches

- Model ensembles

- Scenario simulations (rainfall ranges, planting window shifts, fertiliser strategies)

The value isn’t just a better R-squared. It’s the ability to:

- Re-run the same experiment later

- Explain why a forecast changed

- Audit what data and code produced which output

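Schedulers help create that audit trail by recording job metadata, resource usage, and execution history: who ran what, when, on which resources, and whether it succeeded. They do not know which data or code version a job used, so pair them with experiment tracking to close the loop.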

If you can’t reproduce your results, you can’t defend your recommendations to a grower—or to your CFO.
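One lightweight way to capture that audit trail is to write a small manifest alongside every run. The sketch below is an assumption about what such a record might contain, not a standard format: `build_manifest`, its field names, and the sample values are hypothetical. On a Slurm cluster, the job ID could be read from the `SLURM_JOB_ID` environment variable that Slurm sets for each job.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(payload: bytes) -> str:
    """Short SHA-256 digest that identifies an exact input."""
    return hashlib.sha256(payload).hexdigest()[:16]


def build_manifest(job_id, code_version, dataset_bytes, params):
    """Collect what is needed to re-run and audit one experiment."""
    return {
        "job_id": job_id,
        "code_version": code_version,  # e.g. a git commit hash
        "data_fingerprint": fingerprint(dataset_bytes),
        "params": params,
        # sort_keys makes the digest independent of dictionary ordering
        "params_fingerprint": fingerprint(json.dumps(params, sort_keys=True).encode()),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Illustrative inputs only; real runs would hash the actual dataset files.
data_v1 = b"paddock-12,ndvi=0.61\n"
data_v2 = b"paddock-12,ndvi=0.58\n"

run_a = build_manifest("1001", "a1b2c3d", data_v1, {"lr": 0.01, "seed": 7})
run_b = build_manifest("1002", "a1b2c3d", data_v1, {"seed": 7, "lr": 0.01})
run_c = build_manifest("1003", "a1b2c3d", data_v2, {"lr": 0.01, "seed": 7})

print(run_a["data_fingerprint"] == run_b["data_fingerprint"])  # True: same inputs
print(run_a["data_fingerprint"] == run_c["data_fingerprint"])  # False: data changed
```

When a forecast changes between two runs, comparing manifests shows at a glance whether the data, the parameters, or the code moved.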

What this signals about the AI market: infrastructure is where the fight is

A lot of attention goes to model releases. But the competition is shifting to ecosystems: hardware, orchestration, developer tools, and open-source distribution.

Nvidia is responding to two pressures:

- Rising competition in AI hardware and platforms

- A surge in capable open-source models, including from Chinese AI labs

So Nvidia is strengthening the path from “model exists” to “model runs cheaply and reliably.” For AgriTech, that’s good news, because agriculture is a cost-sensitive industry. AI that costs too much per hectare won’t last.

Why this matters specifically in Australia

Australia’s agricultural footprint is massive and geographically dispersed. That creates two operational realities:

- Connectivity varies (remote regions, intermittent links)

- Data volumes can be huge (imagery, sensor streams, machinery telemetry)

That pushes many organisations toward hybrid AI infrastructure:

- Edge inference on-farm (for latency and connectivity)

- Centralised training in cloud or data centres (for scale)

- Periodic batch processing for crop monitoring and reporting

Scheduling tools become the bridge that keeps hybrid systems stable. They decide what runs where, when, and at what cost.
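A routing rule for "what runs where" can start as something very simple. The sketch below is purely illustrative: the `place` function, the five-second latency threshold, and the three targets are assumptions chosen to mirror the hybrid setup described above, not a feature of any particular scheduler.

```python
from enum import Enum


class Target(Enum):
    EDGE = "edge inference on-farm"
    CENTRAL = "centralised training"
    BATCH = "periodic batch processing"


def place(kind: str, max_latency_s: float, link_up: bool) -> Target:
    """Route a workload using simple, illustrative rules."""
    if kind == "training":
        return Target.CENTRAL   # training needs scale, so it goes to central compute
    if max_latency_s < 5 or not link_up:
        return Target.EDGE      # tight deadline or no connectivity: stay on-farm
    return Target.BATCH         # everything else joins the next batch window


print(place("inference", max_latency_s=1, link_up=True))      # Target.EDGE
print(place("inference", max_latency_s=3600, link_up=True))   # Target.BATCH
print(place("training", max_latency_s=86400, link_up=False))  # Target.CENTRAL
```

Real placement logic would also weigh cost and data transfer volumes, but writing the rules down as code is what makes them testable and repeatable.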

Practical playbook: how AgriTech teams can use open-source AI infrastructure

If you’re building or scaling AI in agriculture, you don’t need to copy Nvidia. But you should copy the principle: treat AI operations as a product, not an experiment.

1) Start with a compute “bill of materials” for one use case

Pick a single workflow—say, weekly crop stress detection—and document:

- Inputs (imagery type, resolution, volume)

- Processing steps (preprocessing, inference, postprocessing)

- Frequency (weekly, daily during peak)

- SLA (how fast the output must be ready to be useful)

This turns “we need GPUs” into “we need 6 GPU-hours per farm per week during January–March.” That’s finance-friendly.
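Here is how that kind of number might be derived. Every input below is an assumption picked to reproduce the 6 GPU-hours figure: the image count, the per-image processing time, the 50 farms, the 13-week window for January to March, and the price per GPU-hour are illustrative, not measurements.

```python
def gpu_hours_per_run(images: int, gpu_seconds_per_image: float) -> float:
    """GPU-hours to process one farm's weekly imagery."""
    return images * gpu_seconds_per_image / 3600


def seasonal_gpu_hours(per_farm_week: float, farms: int, weeks: int) -> float:
    """Total GPU-hours across all farms for the peak period."""
    return per_farm_week * farms * weeks


# Assumed workload: 4,320 images per farm per week at 5 GPU-seconds each.
per_farm_week = gpu_hours_per_run(images=4320, gpu_seconds_per_image=5.0)

# Assumed fleet: 50 farms over a 13-week peak (roughly January to March).
season_total = seasonal_gpu_hours(per_farm_week, farms=50, weeks=13)

# Assumed price of 3.00 per GPU-hour, in whatever currency you budget in.
budget = season_total * 3.00

print(per_farm_week)  # 6.0
print(season_total)   # 3900.0
print(budget)         # 11700.0
```

Swap in your own measurements and the same three lines of arithmetic give finance a number they can plan around.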

2) Add scheduling before you add more GPUs

Many teams buy capacity before fixing utilisation. That’s backwards.

A scheduler helps you:

- Increase GPU utilisation (less idle time)

- Reduce job collisions and manual coordination

- Enforce fair access across projects (research vs production)

Even if you’re not running Slurm yet, the concept matters: automate orchestration early.
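Before asking for more hardware, it helps to measure how much of the existing capacity is actually used. A rough sketch, assuming you can export job records with a GPU count and a duration; the record format and the sample jobs here are made up for illustration.

```python
def gpu_utilisation(jobs, total_gpus, window_hours):
    """Fraction of available GPU-hours actually consumed in the window."""
    capacity = total_gpus * window_hours
    used = sum(job["gpus"] * job["hours"] for job in jobs)
    return used / capacity


# Hypothetical job log for one day on a 4-GPU machine.
jobs = [
    {"name": "retrain", "gpus": 2, "hours": 10.0},
    {"name": "batch-inference", "gpus": 1, "hours": 6.0},
    {"name": "hyperparameter-search", "gpus": 4, "hours": 2.5},
]

utilisation = gpu_utilisation(jobs, total_gpus=4, window_hours=24)
idle_gpu_hours = 4 * 24 - sum(job["gpus"] * job["hours"] for job in jobs)

print(f"{utilisation:.1%} utilised, {idle_gpu_hours:.0f} GPU-hours idle")
# 37.5% utilised, 60 GPU-hours idle
```

In this made-up example, more than half the capacity sits idle, which is an argument for better scheduling rather than another purchase order.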

3) Build governance into the workflow, not into meetings

Agriculture AI often touches regulated or commercially sensitive data (farm performance, supply chain volumes, pricing signals). Governance can’t be an afterthought.

Operational controls that work in practice:

- Role-based access to datasets and compute partitions

- Mandatory experiment tracking (model version, data version)

- Cost allocation tags per customer, region, or crop type

Schedulers and MLOps tooling make this enforceable.
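Cost allocation tags only pay off if something adds them up. A minimal sketch, assuming each job record carries a `tags` dictionary and a GPU-hour total; the record shape, the tag names, and the rate are hypothetical.

```python
from collections import defaultdict


def allocate_costs(jobs, rate_per_gpu_hour, tag):
    """Sum compute cost per value of one tag (e.g. crop, region, customer)."""
    totals = defaultdict(float)
    for job in jobs:
        # Jobs missing the tag are surfaced rather than silently dropped.
        key = job["tags"].get(tag, "untagged")
        totals[key] += job["gpu_hours"] * rate_per_gpu_hour
    return dict(totals)


# Hypothetical job records.
jobs = [
    {"gpu_hours": 12.0, "tags": {"crop": "wheat", "region": "WA"}},
    {"gpu_hours": 8.0, "tags": {"crop": "wheat", "region": "NSW"}},
    {"gpu_hours": 5.0, "tags": {"crop": "canola", "region": "WA"}},
    {"gpu_hours": 3.0, "tags": {"region": "VIC"}},
]

by_crop = allocate_costs(jobs, rate_per_gpu_hour=2.0, tag="crop")
print(by_crop)  # {'wheat': 40.0, 'canola': 10.0, 'untagged': 6.0}
```

The `untagged` bucket is deliberate: a growing untagged total is an early sign that the tagging rule is not being enforced.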

4) Plan for seasonal bursts like a retailer plans for Christmas

It’s December 2025. Many agri organisations are already planning next season’s analytics and AI workloads.

Treat peak agronomy periods like peak commerce events:

- Pre-book capacity (cloud reservations or on-prem scheduling partitions)

- Define priority rules (biosecurity alerts outrank R&D retraining)

- Create a “fast lane” for inference jobs that drive daily decisions

If you do this well, you don’t just reduce cost—you reduce stress across the whole operation.
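Pre-booking capacity comes down to one question: how many GPUs does it take to clear the peak workload inside the SLA window? The sketch below assumes a peak of 50 farms at 6 GPU-hours each, a 24-hour turnaround, and an 80% achievable utilisation. All of these are illustrative figures, not benchmarks.

```python
import math


def gpus_needed(peak_gpu_hours: float, sla_hours: float,
                target_utilisation: float = 1.0) -> int:
    """GPUs required to clear a peak workload inside the SLA window."""
    if not 0 < target_utilisation <= 1:
        raise ValueError("target_utilisation must be in (0, 1]")
    return math.ceil(peak_gpu_hours / (sla_hours * target_utilisation))


peak = 50 * 6  # assumed: 50 farms x 6 GPU-hours each = 300 GPU-hours

ideal = gpus_needed(peak, sla_hours=24)
realistic = gpus_needed(peak, sla_hours=24, target_utilisation=0.8)

print(ideal)      # 13
print(realistic)  # 16
```

The gap between the two answers is the cost of imperfect utilisation, which is why the scheduling work earlier in this playbook feeds directly into how much capacity you need to reserve.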

People also ask: does open-source AI actually help farms?

Does open-source AI reduce costs in AgriTech?

Yes, when it reduces operational friction: faster trials, fewer licensing constraints, and better portability across cloud/on-prem. The savings show up in time-to-deploy and higher utilisation, not just in “free software.”

Is Slurm only for supercomputers?

No. Slurm started in HPC, but it’s widely used for AI training and batch inference. If you’re running multi-GPU workloads or multiple teams on shared compute, a scheduler becomes useful quickly.

What should a small AgriTech startup do first?

Get disciplined about repeatability and cost tracking before scaling infrastructure:

- Track experiments and data versions

- Measure GPU-hours per workflow

- Automate job runs and monitoring

You can adopt heavier orchestration later, but the habits matter now.

Where this leaves AgriTech leaders

Nvidia buying SchedMD is a reminder that AI success is less about flashy demos and more about repeatable operations. Open-source AI infrastructure—schedulers, orchestration, and model tooling—helps AgriTech teams build systems that survive real seasons, real budgets, and real customers.

If you’re responsible for AI in agriculture, here’s the stance I’d take: stop treating infrastructure as “engineering’s problem.” It’s a strategy decision. It determines whether crop monitoring stays a pilot or becomes a product, whether yield prediction is trusted or ignored, and whether precision agriculture AI pays for itself.

What would change in your operation if you could run your peak-season AI workloads with predictable turnaround times—and a predictable cost per hectare?