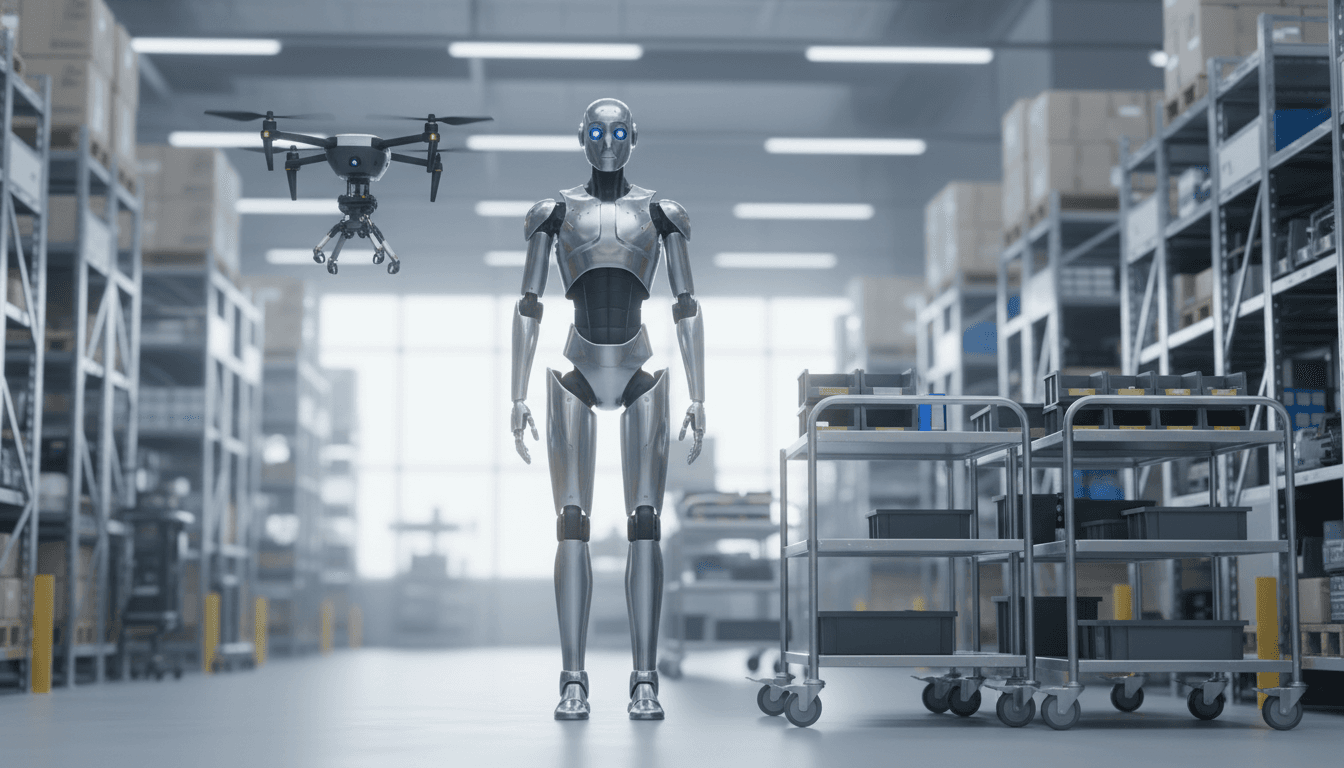

Humanoid robots at $29,900 are becoming real pilot projects. See what Unitree H2, VLA models, and lightweight arms mean for 2026 automation.

Humanoid Robots at $29,900: What Changes in 2026

A 180 cm, 70 kg humanoid robot with a list price starting at US$29,900 isn’t a science-fair novelty anymore—it’s a procurement conversation. That single price tag, attached to Unitree’s human-size H2 bionic humanoid, is the clearest signal that the humanoid market is shifting from “cool demo” to “budget line item.”

For leaders following how AI and robotics are transforming industries worldwide, the point isn’t whether every business should buy a humanoid next quarter. The point is simpler: the cost and capability curve is bending fast, and the companies that learn how to pilot, integrate, and govern robots now will be the ones that get real ROI when the hardware and AI mature another notch.

This post uses the latest “Video Friday” roundup (humanoids, drones, lightweight arms, and talks on generative AI) as a starting point to pull out what actually matters for operations, product teams, and innovation leaders heading into 2026.

The $29,900 humanoid is a forcing function for adoption

A humanoid robot at this price isn’t “cheap,” but it’s cheap enough to change behavior. In many organizations, $30k–$60k is the range where experimentation becomes politically easy: a department can justify a pilot without a multi-quarter capital committee process.

Here’s why that matters for real-world automation.

Humanoids are being positioned as generalists, not one-task machines

Unitree’s messaging around the H2—human-sized, “born to serve everyone safely and in a friendly way”—isn’t just marketing fluff. It’s a strategy: sell the humanoid as a general-purpose labor platform that can be reassigned as workflows change.

That promise is attractive because most automation investments fail at the handoff between “the task we automated” and “the next task that pops up.” A fixed industrial arm is excellent—until you need it to do something else, in a different place, with different objects.

Humanoids aim to solve three practical problems at once:

- Mobility in human environments (doors, narrow aisles, ramps)

- Manipulation across many object types (boxes, tools, carts)

- Compatibility with existing facilities (no full rebuild required)

Are they there yet? Not universally. But the business case starts to appear when you can use one robot across multiple “good enough” tasks instead of building five specialized systems.

The new baseline question: “What can we learn for $30k?”

Many companies get robotics procurement wrong by trying to justify a full rollout before they’ve built internal competence. At $29,900 (plus tax and shipping), the better framing is:

A humanoid pilot is a capability-building project before it’s an automation project.

If you run warehouses, hospitals, labs, retail, or facilities operations, a small humanoid pilot can produce measurable outputs even if the robot never becomes a full-time worker.

Examples of “learning ROI” you can bank within 60–90 days:

- Safety and governance: your first real robot risk assessment, incident playbooks, and human-robot interaction rules

- Workflow mapping: a hard look at which tasks are stable enough for automation

- Data readiness: understanding what sensors, labeling, and telemetry you need for closed-loop operation

- Integration muscle: networking, MDM-style device management, authentication, audit logs

That foundation pays off whether you later deploy humanoids, AMRs, cobots, or drone systems.

Natural motion is the real KPI—because it predicts uptime

One of the videos showed LimX Dynamics’ “Oli,” a human-scale humanoid with 31 degrees of freedom, executing a coordinated whole-body sequence from lying down to standing up.

That kind of “getting up” demo can look like showmanship. It isn’t. It’s a proxy for whether a robot can survive messy reality.

Why “stand up from the floor” matters in operations

In real facilities, robots:

- get bumped by carts

- catch a cable underfoot

- slip on dust or packaging debris

- misjudge a step

A robot that can recover from awkward states without a technician rescue is a robot that can actually deliver ROI.
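The link between recovery and uptime can be made concrete with the standard steady-state availability formula, A = MTBF / (MTBF + MTTR). The sketch below uses hypothetical numbers (one disruptive event every 4 operating hours, 30 minutes for a technician rescue versus 1 minute to self-recover); they are illustrations, not measurements from any specific robot.

```python
def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the robot is working.

    mtbf_hours: mean time between failures (or disruptive events)
    mttr_hours: mean time to recovery after each event
    """
    if mtbf_hours <= 0 or mttr_hours < 0:
        raise ValueError("MTBF must be positive and MTTR non-negative")
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical scenario: a fall, bump, or snag every 4 operating hours.
MTBF = 4.0
technician_rescue = availability(MTBF, 30 / 60)  # 30 min until a person frees it
self_recovery = availability(MTBF, 1 / 60)       # 1 min to get up unaided

print(f"technician rescue: {technician_rescue:.1%}")
print(f"self-recovery:     {self_recovery:.1%}")
```

With these assumed inputs, availability rises from about 89% to above 99% without the robot failing any less often; only the recovery time changed.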

A practical stance: robustness beats elegance. If you’re considering humanoids, ask vendors to demonstrate:

- recovery behaviors (getting up, rebalancing, self-righting)

- fall detection and safe shutdown

- repeatability over long runs (not one perfect take)

- maintenance intervals and the reality of part replacement
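One way to see why “one perfect take” says little is to put a confidence interval on the observed success rate. The sketch below uses the Wilson score interval, a standard choice for small samples; the trial counts are hypothetical, and treating each run as an independent pass/fail trial is a simplifying assumption.

```python
import math


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a success rate.

    z = 1.96 corresponds to roughly 95% confidence.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = p_hat + z**2 / (2 * trials)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return (center - margin) / denom


# Hypothetical vendor demos: one flawless take, a short clean run, a long run.
for s, n in [(1, 1), (20, 20), (95, 100)]:
    print(f"{s}/{n} successes -> lower bound {wilson_lower_bound(s, n):.0%}")
```

With these counts, a single perfect run only supports a lower bound of about 21%, 20 clean runs about 84%, and 95 successes out of 100 about 89%: a long run with a few failures tells you more than a short flawless one.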

This connects directly to research themes like the IROS workshop talk on robustness and surviving failures. The industry is finally being honest: robots will fail, and the winners are the systems that fail safely, recover quickly, and produce usable diagnostics.

Small robots finally get arms—and that changes drones, inspection, and last-mile tasks

Another standout from the roundup: researchers addressed a long-standing constraint—small robots couldn’t “afford” arms because motors made them too heavy. The approach described replaces multiple motors with a single motor plus miniature electrostatic clutches, enabling a high-DOF lightweight arm that can even hitch onto a drone.

This sounds niche until you map it to industrial workflows.

The emerging pattern: mobile base + lightweight manipulator

For years, teams had to choose:

- a ground robot that moves well but can’t do much with objects, or

- a manipulator that works well but is bolted to one place

Lightweight, high-DOF arms point toward hybrid systems where mobility and manipulation are modular.

Use cases that become much more feasible when arms get lighter:

- Drone-assisted inspection with interaction: not just “look,” but “touch,” “place,” or “retrieve”

- Inventory exceptions: grabbing a misplaced item instead of flagging it for humans

- Field maintenance: turning a knob, opening a latch, placing a sensor tag

- Remote operations in hazardous environments (industrial plants, disaster response)

The real opportunity is reducing truck rolls and technician dispatch. If a robot can perform even a small subset of “hands-on” tasks during inspection, the economics shift fast.
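The truck-roll argument is simple arithmetic: savings scale with how many dispatches the robot makes unnecessary. The sketch below is a back-of-envelope model; every input is a hypothetical placeholder, and it ignores the robot’s own operating cost, which a real business case would subtract.

```python
from dataclasses import dataclass


@dataclass
class InspectionProgram:
    """Hypothetical inputs for a drone or mobile-robot inspection program."""
    inspections_per_year: int
    dispatch_rate: float      # share of inspections that currently trigger a truck roll
    resolved_on_site: float   # share of those dispatches the robot could handle instead
    cost_per_dispatch: float  # fully loaded cost of one technician visit (USD)


def avoided_dispatch_savings(p: InspectionProgram) -> float:
    """Annual cost of the truck rolls the robot makes unnecessary (gross, not net)."""
    avoided = p.inspections_per_year * p.dispatch_rate * p.resolved_on_site
    return avoided * p.cost_per_dispatch


# Illustrative numbers only; replace them with your own baseline data.
program = InspectionProgram(
    inspections_per_year=2_000,
    dispatch_rate=0.25,
    resolved_on_site=0.20,
    cost_per_dispatch=600.0,
)
print(f"Estimated gross annual savings: ${avoided_dispatch_savings(program):,.0f}")
```

Here 100 avoided visits at $600 each come to $60,000 a year, which is why even a modest `resolved_on_site` share can matter.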

A quick myth-bust: “Robotics is only for big factories”

It’s not. The story here is miniaturization and modularity. When arms can hitch to drones and small platforms, you can automate tasks in:

- mid-sized warehouses

- utilities and infrastructure inspection

- construction site documentation and checks

- campus facilities management

The barrier isn’t company size anymore—it’s whether you can define repeatable workflows and manage the operational risk.

Generative AI in robotics: the value is in instruction, not vibes

The roundup also included a fireside chat in which Amazon Robotics leadership discussed generative AI’s role in robotics innovation, plus a GRASP/Physical Intelligence talk on scaling robot learning with vision-language-action (VLA) models.

Here’s the cleanest way to think about it:

Generative AI becomes valuable in robotics when it reduces the cost of specifying tasks and handling edge cases.

What vision-language-action models actually buy you

Traditional robot programming is brittle: you hard-code a sequence, and the world refuses to cooperate.

VLA models aim to connect:

- what the robot sees (vision)

- what you tell it (language)

- how it moves (actions)

In practice, this can reduce setup time for tasks like:

- “Pick the damaged boxes and place them in the red tote.”

- “Move items labeled ‘cold chain’ to the insulated cart.”

- “If the aisle is blocked, take the alternate route and notify me.”

Even partial success is useful. The biggest near-term win is fewer engineering hours per workflow change.
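Structurally, a VLA-driven task loop might look like the sketch below. Every name here (`Observation`, `Action`, `VLAPolicy`, `run_task`) is hypothetical and not the API of any particular model or vendor SDK, and a toy policy stands in for a trained model. The point is that the task is specified by the instruction string, so changing the workflow means changing a sentence rather than rewriting a hard-coded sequence.

```python
from dataclasses import dataclass
from typing import Callable, Protocol, Sequence


@dataclass
class Observation:
    """What the robot sees and feels at one instant."""
    rgb_frames: Sequence[bytes]       # encoded camera images
    joint_positions: Sequence[float]  # proprioception


@dataclass
class Action:
    """A short chunk of joint targets for the low-level controller."""
    joint_targets: Sequence[Sequence[float]]  # one row per control step


class VLAPolicy(Protocol):
    """Hypothetical interface: vision + language in, actions out."""

    def act(self, obs: Observation, instruction: str) -> Action: ...


def run_task(policy: VLAPolicy,
             get_obs: Callable[[], Observation],
             send_action: Callable[[Action], None],
             instruction: str,
             is_done: Callable[[Observation], bool],
             max_steps: int) -> int:
    """Closed loop: observe, check for completion, query the policy, execute.

    Returns the number of actions sent.
    """
    for step in range(max_steps):
        obs = get_obs()
        if is_done(obs):
            return step
        send_action(policy.act(obs, instruction))
    return max_steps


class HoldPositionPolicy:
    """Toy stand-in for a trained model so the loop runs without hardware."""

    def act(self, obs: Observation, instruction: str) -> Action:
        # A real VLA model maps pixels + text to motion; this just holds still.
        return Action(joint_targets=[list(obs.joint_positions)])


sent: list[Action] = []
snapshot = Observation(rgb_frames=[b""], joint_positions=[0.0, 0.5])
run_task(HoldPositionPolicy(), lambda: snapshot, sent.append,
         "Pick the damaged boxes and place them in the red tote.",
         is_done=lambda o: False, max_steps=3)
# Changing the workflow changes only the sentence, not the program.
run_task(HoldPositionPolicy(), lambda: snapshot, sent.append,
         "Move items labeled 'cold chain' to the insulated cart.",
         is_done=lambda o: False, max_steps=3)
```

Whether a real model actually generalizes to the new instruction is exactly what a pilot has to measure; the loop itself stays the same either way.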

The hard part: closed-loop behavior in a chaotic physical world

Language models are comfortable with ambiguity. Robots can’t be.

If you’re piloting AI-powered robotics, evaluate systems on:

- latency (can it respond quickly enough?)

- uncertainty handling (does it ask for help at the right time?)

- policy constraints (what is it forbidden to do?)

- data strategy (how are edge cases captured and learned?)

I’m bullish on generative AI for robotics, but only when companies treat it as a control and safety engineering problem, not a “chatbot with wheels.”
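Treating generative AI as a control and safety problem usually means putting a deterministic check between the model and the actuators. The sketch below shows one possible gate covering three of the criteria above (policy constraints, latency, uncertainty). The thresholds, the forbidden list, and the assumption that the model emits a usable confidence score are all hypothetical, and a software gate like this complements, rather than replaces, hardware safety functions such as emergency stops.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    ASK_HUMAN = "ask_human"
    REFUSE = "refuse"
    SAFE_STOP = "safe_stop"


@dataclass
class ProposedAction:
    name: str          # e.g. "pick", "enter_zone"
    target: str        # object or location the action applies to
    confidence: float  # model's own score in [0, 1]; assumes it is reasonably calibrated
    latency_ms: float  # time the model took to produce this action


# Hypothetical site policy: (action, target) pairs the robot must never perform.
FORBIDDEN = {("enter_zone", "pharmacy"), ("pick", "sharps_container")}


def gate(action: ProposedAction,
         min_confidence: float = 0.8,
         latency_budget_ms: float = 200.0) -> Decision:
    """Check a model's proposed action before it reaches the motors."""
    # Hard constraints come first: no confidence score overrides them.
    if (action.name, action.target) in FORBIDDEN:
        return Decision.REFUSE
    # A decision that arrives too late may describe a world that has moved on.
    if action.latency_ms > latency_budget_ms:
        return Decision.SAFE_STOP
    # Low confidence means asking for help instead of guessing.
    if action.confidence < min_confidence:
        return Decision.ASK_HUMAN
    return Decision.EXECUTE
```

The order of the checks is the design choice: forbidden actions are refused even when the model is confident, and stale outputs stop the robot before confidence is even considered.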

A practical playbook: how to pilot a humanoid (or any AI robot) in Q1–Q2 2026

If the $29,900 humanoid has you curious, the right next move isn’t “buy one and hope.” It’s a disciplined pilot.

Step 1: Pick tasks that are boring, frequent, and measurable

Choose tasks with:

- stable environment

- clear start/end conditions

- low safety risk

- measurable throughput and quality

Good pilot candidates:

- internal deliveries (kits, supplies, samples)

- cart movement in controlled zones

- shelf scanning and exception reporting

- simple pick-and-place in a dedicated area

Avoid pilots where success depends on perfect dexterity or flawless navigation on day one (fine wiring, delicate handling, crowded public spaces).
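The Step 1 criteria work as a hard filter, with frequency as the tiebreaker (“boring, frequent, and measurable”). A minimal sketch, assuming the team has assessed each candidate task yes/no on the four criteria; the task names, counts, and the `min_runs_per_day` threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CandidateTask:
    name: str
    stable_environment: bool
    clear_start_end: bool
    low_safety_risk: bool
    measurable: bool
    runs_per_day: int


def pilot_shortlist(tasks: list[CandidateTask], min_runs_per_day: int = 10) -> list[str]:
    """Keep tasks that meet every criterion, most frequent first."""
    eligible = [
        t for t in tasks
        if t.stable_environment and t.clear_start_end and t.low_safety_risk
        and t.measurable and t.runs_per_day >= min_runs_per_day
    ]
    return [t.name for t in sorted(eligible, key=lambda t: t.runs_per_day, reverse=True)]


tasks = [
    CandidateTask("supply-room deliveries", True, True, True, True, 40),
    CandidateTask("shelf scanning", True, True, True, True, 12),
    CandidateTask("lobby guest escort", False, True, False, True, 30),
    CandidateTask("monthly stock audit", True, True, True, True, 1),
]
print(pilot_shortlist(tasks))
```

The lobby escort is frequent but fails on environment stability and safety risk; the audit passes every criterion but is too rare to generate useful pilot data.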

Step 2: Define success metrics before the robot arrives

A pilot without metrics becomes a demo.

Pick 3–5 metrics, such as:

- task completion rate (%)

- interventions per hour

- mean time to recovery (minutes)

- safety incidents and near misses

- cost per completed task vs. baseline
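Most of these metrics fall out of a few raw counts, which is an argument for deciding what to log before the robot arrives. A minimal sketch, assuming a hypothetical `PilotLog` record; the field names and the way cost is attributed to the pilot window are illustrative choices, not a standard.

```python
from dataclasses import dataclass


@dataclass
class PilotLog:
    """Raw counts collected during one pilot window (hypothetical fields)."""
    attempted: int
    completed: int
    operating_hours: float
    interventions: int
    recovery_minutes: list[float]  # one entry per recovery event
    safety_events: int             # incidents plus near misses
    robot_cost: float              # pilot cost attributed to this window (USD)


def pilot_metrics(log: PilotLog, baseline_cost_per_task: float) -> dict[str, float]:
    """Turn raw pilot counts into the Step 2 metrics."""
    if log.operating_hours <= 0 or baseline_cost_per_task <= 0:
        raise ValueError("operating hours and baseline cost must be positive")
    completion_rate = log.completed / log.attempted if log.attempted else 0.0
    mttr = (sum(log.recovery_minutes) / len(log.recovery_minutes)
            if log.recovery_minutes else 0.0)
    cost_per_task = log.robot_cost / log.completed if log.completed else float("inf")
    return {
        "task_completion_rate_pct": 100 * completion_rate,
        "interventions_per_hour": log.interventions / log.operating_hours,
        "mean_time_to_recovery_min": mttr,
        "safety_events": float(log.safety_events),
        "cost_per_task_vs_baseline": cost_per_task / baseline_cost_per_task,
    }


# Illustrative two-week window; the numbers are made up.
log = PilotLog(attempted=400, completed=352, operating_hours=80.0, interventions=24,
               recovery_minutes=[4.0, 6.0, 2.0], safety_events=1, robot_cost=2_000.0)
for name, value in pilot_metrics(log, baseline_cost_per_task=5.0).items():
    print(f"{name}: {value:.2f}")
```

A `cost_per_task_vs_baseline` above 1.0 means the robot currently costs more per completed task than the existing process, which is worth knowing honestly rather than hiding.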

Step 3: Plan for failure modes (seriously)

A robot that can’t be quickly “unstuck” becomes an expensive statue.

Have answers for:

- Who can pause/stop it?

- Who’s on-call to recover it?

- What happens if the network drops?

- Where do logs go, and who reads them?

- How do you patch/update the system safely?
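“What happens if the network drops?” deserves an answer in code, not only in a meeting. A common pattern is a heartbeat watchdog that runs locally on the robot and stops motion when the link goes quiet. The sketch below assumes a hypothetical 2-second timeout and a caller-supplied `on_timeout` callback that would trigger the platform’s own safe-stop routine; it is not any vendor’s API, and the simulated clock exists only so the example runs without hardware.

```python
import time
from typing import Callable


class HeartbeatWatchdog:
    """Trigger a safe stop if the control link goes quiet for too long."""

    def __init__(self, timeout_s: float, on_timeout: Callable[[], None],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.on_timeout = on_timeout
        self.clock = clock
        self.last_beat = clock()
        self.tripped = False

    def beat(self) -> None:
        """Call whenever a heartbeat arrives from the fleet server."""
        self.last_beat = self.clock()

    def check(self) -> bool:
        """Call periodically from the robot's local control loop.

        Returns True once tripped. It stays tripped until reset() is called,
        so a returning network alone never resumes motion.
        """
        if not self.tripped and self.clock() - self.last_beat > self.timeout_s:
            self.tripped = True
            self.on_timeout()
        return self.tripped

    def reset(self) -> None:
        """Explicit human acknowledgement after the cause is understood."""
        self.tripped = False
        self.last_beat = self.clock()


# Simulated clock so the example is deterministic.
now = [0.0]
stops: list[float] = []
wd = HeartbeatWatchdog(timeout_s=2.0, on_timeout=lambda: stops.append(now[0]),
                       clock=lambda: now[0])
now[0] = 1.5
wd.beat()
now[0] = 3.0
still_ok = not wd.check()  # 1.5 s since the last beat: keep working
now[0] = 4.0
wd.check()                 # 2.5 s of silence: safe stop fires once
wd.beat()                  # the link is back, but the robot stays stopped
```

The latching behavior is deliberate: if the network flaps, the robot should not lurch back into motion on its own, which also gives the on-call person from the list above a clear moment to step in.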

Step 4: Treat integration as the product

Even if the robot is impressive, the value usually comes from integration:

- facility maps

- inventory systems

- ticketing/CMMS

- authentication and permissions

- video retention and privacy controls

This is where AI and robotics transformations become real—the robot becomes part of your operating system.
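Much of that integration is unglamorous mapping between systems: for example, turning a robot exception into a ticket in the CMMS the maintenance team already uses. A minimal sketch, assuming a generic dictionary-style ticket payload; the field names, routing table, event kinds, and robot ID are hypothetical and would need to match your actual ticketing system’s API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RobotEvent:
    robot_id: str
    kind: str      # e.g. "stuck", "fall", "low_battery"
    location: str  # zone name from the facility map
    details: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# Hypothetical routing table: which team owns which event kind, and how urgent it is.
ROUTING = {
    "fall": ("robotics-oncall", "high"),
    "stuck": ("robotics-oncall", "medium"),
    "low_battery": ("facilities", "low"),
}


def to_ticket(event: RobotEvent) -> dict[str, str]:
    """Map a robot event onto a generic ticketing payload."""
    # Unknown event kinds go to the robotics on-call team rather than being dropped.
    team, priority = ROUTING.get(event.kind, ("robotics-oncall", "medium"))
    return {
        "title": f"[{event.robot_id}] {event.kind} at {event.location}",
        "assignee_group": team,
        "priority": priority,
        "description": event.details,
        "occurred_at": event.timestamp.isoformat(),
        "source": "robot-fleet",
    }


ticket = to_ticket(RobotEvent("unit-01", "fall", "aisle-7",
                              "Fell after cart contact; recovered unaided"))
print(ticket["title"], ticket["priority"])
```

Keeping the routing in a table rather than in code means facilities staff can adjust ownership and priorities without touching the robot software.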

Where this is headed: industries that will feel it first

Humanoids and AI-powered robotics won’t spread evenly. They’ll show up where labor is constrained, environments are semi-structured, and tasks are repetitive but not perfectly standardized.

Expect faster adoption in:

- logistics and warehousing (cart handling, picking assistance, after-hours tasks)

- healthcare operations (materials transport, supply rooms, non-clinical support)

- manufacturing support roles (line replenishment, inspection, internal logistics)

- facilities and campuses (patrol/inspection, deliveries, basic maintenance checks)

And yes, the drone ecosystem will keep colliding with robotics—especially as lightweight arms and modular manipulators mature.

The companies that win won’t be the ones with the flashiest demo videos. They’ll be the ones that build reliable processes: safety, data capture, integrations, and continuous improvement.

If you’re tracking our Artificial Intelligence & Robotics: Transforming Industries Worldwide series, this is the thread to pull in 2026: robots are becoming purchasable, and AI is becoming instructable. That combination changes the adoption timeline.

The question for most teams isn’t “Will humanoids replace workers?” It’s “Which tasks do we want humans to stop doing first?”

If you’re considering a pilot, start small, measure honestly, and design for failures. You’ll be ready when the next price drop and capability jump arrives—because it will.