Edge AI vision boxes like Darsi Pro point to a 2026 shift: fewer integrations, faster deployment, and better fleet operations for robotics and smart transport.

Edge AI Vision Boxes: What Darsi Pro Signals for 2026

A lot of robotics teams are still building “vision systems” the hard way: a camera here, a compute module there, a time-sync workaround duct-taped in the middle, and a remote management story that only exists in a slide deck.

That’s why e-con Systems’ new Darsi Pro edge AI vision box, announced ahead of its CES 2026 debut, is worth paying attention to. It’s not just another NVIDIA Jetson-based computer. It’s a clear signal that the market is shifting toward production-ready edge AI platforms—where camera connectivity, sensor fusion, OTA updates, and industrial reliability are treated as a single product, not a pile of integration tasks.

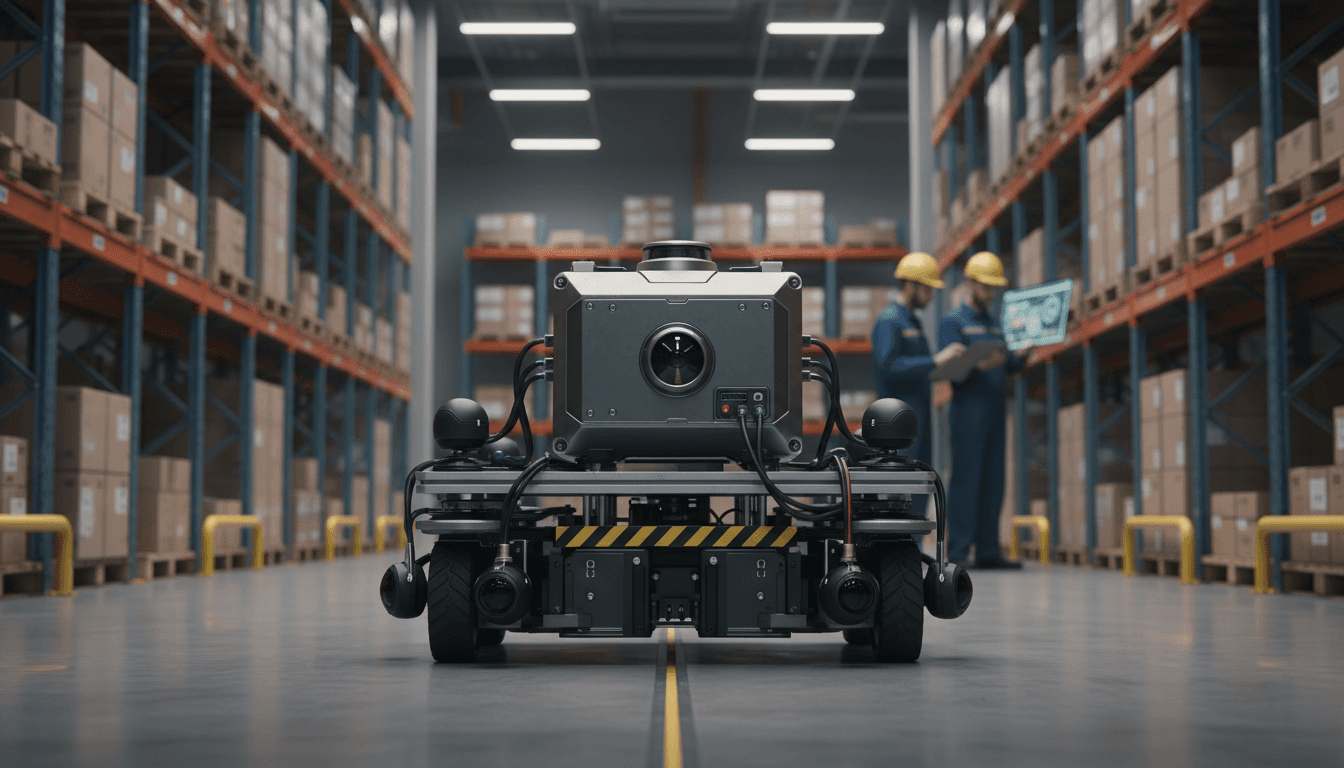

This post is part of our “Artificial Intelligence & Robotics: Transforming Industries Worldwide” series, and Darsi Pro fits the theme perfectly: it’s the kind of hardware-plus-software packaging that’s making AI-powered robotics show up faster in warehouses, factories, and smart city infrastructure.

Darsi Pro in plain terms: it’s a “vision + compute appliance”

Darsi Pro’s big idea is simple: turn the messy edge AI deployment cycle into something closer to an appliance purchase. Instead of sourcing cameras, validating carrier boards, wrestling with time synchronization, and bolting on a device management tool later, you start with a rugged box designed to run perception workloads where they happen.

According to the announcement, Darsi Pro is built on NVIDIA Jetson and is positioned for physical AI use cases (robots and machines that have to perceive and act in real time). e-con Systems highlights performance up to 100 TOPS (trillions of operations per second), plus variants supporting multi-camera GMSL connectivity (up to eight synchronized cameras).

The practical takeaway: edge AI is moving from “developer kit culture” to production platform culture.

Why that matters right now (late 2025 heading into 2026)

January is when robotics roadmaps get real. CES demos turn into Q1 pilot programs, and Q1 pilots turn into “we need it in production by summer.” The teams that win those timelines are the ones who reduce integration risk.

A vision box that combines compute, sensor IO, synchronization, and fleet management isn’t glamorous. It’s just how you ship robots faster.

The real bottleneck in robotics isn’t AI models—it’s integration

Most companies obsess over model accuracy, then lose months to system-level problems:

- Time alignment between camera frames, lidar points, IMU readings, and wheel odometry

- Multi-camera calibration and consistent image quality across environments

- Bandwidth and cabling headaches in mobile platforms

- Field updates that don’t brick devices or break compliance

- Thermal and environmental failures that only show up after weeks on-site

Darsi Pro is designed to address that reality head-on.

e-con Systems calls out Precision Time Protocol (PTP) for synchronization, plus support for cameras, lidar, radar, and IMUs. That’s a big deal because sensor fusion quality usually depends more on timing discipline than on fancy neural networks. If your inputs aren’t synchronized, your “AI” becomes a very confident way to be wrong.

A useful rule of thumb: if your robot can’t trust timestamps, it can’t trust perception.
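
To make that rule of thumb concrete, here is a minimal sketch of application-layer timestamp matching. It assumes every sensor already stamps its samples against a shared clock (for example, one disciplined by PTP) and reports integer microseconds. The function name `match_nearest`, the sample values, and the 5 ms tolerance are illustrative assumptions, not anything from the Darsi Pro software.

```python
from bisect import bisect_left

def match_nearest(frame_ts, sensor_ts, tolerance_us):
    """For each camera frame timestamp, return the index of the closest
    sensor timestamp, or None if nothing lies within tolerance_us.
    sensor_ts must be sorted ascending; all values are integer microseconds."""
    matches = []
    for t in frame_ts:
        i = bisect_left(sensor_ts, t)
        # Only the neighbors just before and just after t can be closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(sensor_ts)]
        best = min(candidates, key=lambda j: abs(sensor_ts[j] - t), default=None)
        if best is not None and abs(sensor_ts[best] - t) <= tolerance_us:
            matches.append(best)
        else:
            matches.append(None)  # no trustworthy partner for this frame
    return matches

# Hypothetical timestamps on a shared clock (microseconds).
camera_us = [0, 33_000, 66_000, 100_000]
imu_us = [1_000, 11_000, 21_000, 31_000, 41_000, 51_000, 61_000, 120_000]
print(match_nearest(camera_us, imu_us, tolerance_us=5_000))  # prints [0, 3, 6, None]
```

The last frame gets `None` because its nearest IMU sample is 20 ms away. Dropping that pairing is usually safer than fusing it, and counting those drops is one cheap way to check whether timestamps can be trusted in the field.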

What “100 TOPS at the edge” actually enables in robotics

“TOPS” can sound like marketing noise, so here’s what it tends to translate to in real deployments: more perception tasks running simultaneously, at higher frame rates, with lower latency.

With an edge AI vision box in the 100 TOPS range, teams typically aim to run combinations like:

- Real-time object detection + tracking

- Multi-camera surround perception (front/side/rear)

- Depth estimation or stereo pipelines

- Segmentation for drivable space / free space

- Visual localization assistance (in addition to lidar SLAM)

- On-device analytics (counts, dwell time, anomalies)
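
One hedged way to reason about “more tasks at higher frame rates” is a per-frame time budget. The sketch below assumes the stages run back to back on each frame, which is a simplification because real pipelines often overlap stages. The stage names and millisecond figures are placeholders, not measurements from Darsi Pro or any Jetson module.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

def fits_budget(stage_latencies_ms, fps):
    """Return (fits, total_ms) for stages that run sequentially per frame."""
    total = sum(stage_latencies_ms.values())
    return total <= frame_budget_ms(fps), total

# Placeholder per-stage latencies; replace with numbers profiled on your hardware.
stages = {"detect": 12.0, "track": 3.0, "segment": 15.0}

for fps in (30, 60):
    fits, total = fits_budget(stages, fps)
    print(f"{fps} fps: budget {frame_budget_ms(fps):.1f} ms, pipeline {total:.1f} ms, fits={fits}")
```

With these placeholder numbers the pipeline fits a 30 fps budget but not a 60 fps one, which is the kind of trade-off extra compute headroom is meant to ease.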

Why edge compute beats cloud for robots that move

For robotics and intelligent transportation systems, cloud-only perception fails for predictable reasons:

- Latency: decisions often need to happen in tens of milliseconds.

- Connectivity: warehouses, loading docks, and streets aren’t perfectly connected.

- Cost: streaming multiple high-res camera feeds burns bandwidth fast.

- Privacy: many deployments can’t ship raw video off-site.

Edge AI computing keeps decision loops local while still allowing cloud-based fleet operations. Darsi Pro’s pairing of edge compute with cloud device management is the right split: act locally, manage centrally.
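
The bandwidth point is easy to check with arithmetic. The sketch below assumes eight 1920x1080 cameras at 30 fps with 2 bytes per pixel, uncompressed. The resolution, frame rate, and pixel format are assumptions chosen for illustration; only the eight-camera count comes from the announcement.

```python
def raw_stream_mbps(width, height, fps, bytes_per_pixel, cameras):
    """Uncompressed video bandwidth in megabits per second."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps * cameras
    return bits_per_second / 1_000_000

# Assumed setup: eight 1080p cameras, 30 fps, 2 bytes per pixel.
mbps = raw_stream_mbps(1920, 1080, 30, 2, 8)
print(f"{mbps:.0f} Mbit/s raw")  # prints "7963 Mbit/s raw"
```

That is roughly 8 Gbit/s before any compression. Video encoding reduces it substantially, but the encoded streams still have to cross whatever uplink the robot or roadside unit actually has, which is why keeping perception local is usually the cheaper design.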

The feature set to scrutinize (and why it’s more than a checklist)

Edge AI platforms live or die by the unsexy details. Here’s how to read the Darsi Pro feature list like an operator, not a brochure reader.

Extensive camera compatibility = fewer redesigns

If you’ve ever had to switch camera modules mid-project due to availability, compliance requirements, or performance issues in low light, you know the pain. e-con Systems’ camera portfolio and stated compatibility matter because they reduce the chance you’ll be forced into a board redesign.

For teams building AMRs or delivery robots, camera supply continuity is a risk category on its own.

Multi-sensor fusion with PTP = the foundation for reliable autonomy

Synchronizing up to eight cameras (in the GMSL variant) while also fusing lidar/radar/IMU inputs is the architecture you want for:

- High-confidence obstacle detection

- Better motion estimation in feature-poor areas (glossy floors, long corridors)

- Improved performance in low light or glare (where lidar or radar can stabilize perception)

This matters because the future of physical AI is not “one perfect sensor.” It’s redundancy and fusion.

Flexible connectivity = faster vehicle integration

Dual GbE with PoE, USB 3.2, HDMI, CAN, GPIO, wireless modules—these aren’t luxury ports. They’re how you avoid a custom harness and weeks of electrical validation.

For mobility platforms, CAN support in particular tends to simplify integration with vehicle subsystems and safety controllers.

Cloud management and OTA updates = fleet reality

The shift from “robot prototype” to “robot fleet” happens the moment you need to:

- patch security issues

- roll out model updates

- change camera parameters

- monitor device health

Darsi Pro’s cloud management via CloVis Central (as described in the announcement) aligns with what fleet operators demand: secure OTA, remote configuration, and health monitoring.

I’m opinionated here: if your robotics platform doesn’t have a serious OTA strategy, you don’t have a product—you have a demo that will age badly.
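
As one illustration of what a serious OTA strategy can include, here is a minimal sketch of staged rollouts by cohort. It is a generic pattern, not the CloVis Central API, and the function names and device IDs are hypothetical. Each device ID is hashed into a stable bucket from 0 to 99, and an update reaches only the devices whose bucket falls below the current rollout percentage.

```python
import hashlib

def rollout_bucket(device_id, buckets=100):
    """Map a device ID to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets

def in_rollout(device_id, percent):
    """True if this device is in the first `percent` of the fleet."""
    return rollout_bucket(device_id) < percent

# Hypothetical fleet; widen the stage only after the previous one looks healthy.
fleet = [f"amr-{n:04d}" for n in range(1000)]
for stage in (5, 25, 100):
    targeted = sum(in_rollout(d, stage) for d in fleet)
    print(f"stage {stage}%: {targeted} devices targeted")
```

Because the bucket depends only on the device ID, a device included at 5% stays included at 25% and 100%, so each stage is a strict superset of the one before it. Rollback and power-loss handling are separate problems that this sketch does not cover.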

Industrial reliability = the hidden ROI

A rugged enclosure and wide operating temperature range aren’t just for harsh factories. They matter in “normal” environments too:

- warehouses with cold zones

- loading docks with humidity swings

- sunlight exposure in last-mile delivery

- vibration from uneven flooring

Reliability is what protects your unit economics. Every truck roll to reboot a device is a tax on your business model.

Where Darsi Pro fits: logistics, AMRs, and smart transportation

e-con Systems explicitly positions Darsi Pro for autonomous mobile robots, delivery robots, warehouse systems, and intelligent transportation systems—and those are exactly the industries seeing the most practical value from edge AI vision.

Warehouse robotics: navigation + analytics in the same box

Warehouse operators increasingly want robots that do two jobs:

- Move safely and efficiently (navigation, obstacle avoidance)

- Capture operational intelligence (inventory visibility, shelf conditions, exceptions)

A vision system that supports multi-camera input and can fuse IMU/lidar data makes it easier to build robots that navigate robustly while also running analytics in parallel.

This is one of the most visible ways AI and robotics are transforming industries worldwide: the robot isn’t only labor automation—it’s a roaming sensor network.

Intelligent transportation systems (ITS): local decisions, centralized governance

The announcement mentions demos like automated license plate recognition (ALPR). Whether you’re building smart intersections, gated access, or traffic flow monitoring, edge AI makes sense when:

- you need instant responses

- you want to avoid constant video streaming

- you need predictable operating costs

Edge AI vision boxes are increasingly the “standard form factor” for ITS deployments, especially when paired with remote device management.

Low-light performance: where pilots often fail

e-con Systems also highlights ultra-low-light, high-resolution camera integrations. That’s not a niche feature. Many pilots collapse at the same moment: nighttime operations.

If you’re evaluating an edge AI vision box for robotics, test these conditions early:

- glare from high-bay LEDs

- reflective shrink wrap

- shadows and mixed lighting

- motion blur during turns

Hardware that ships with proven imaging expertise (ISP tuning, consistent camera pipeline support) can save months of perception debugging.

A practical buyer’s checklist for edge AI vision platforms

If you’re considering Darsi Pro—or any Jetson-based edge AI vision box—use this shortlist before you commit.

- Sensor synchronization: Is PTP supported end-to-end? Can you validate timestamps at the application layer?

- Multi-camera scalability: How many cameras can you run at your target resolution and frame rate without thermal throttling?

- Camera pipeline maturity: Are ISP settings stable across lighting changes? Can you reproduce image quality across batches?

- Connectivity realism: Do you actually need GMSL, PoE, or both? What’s your cabling plan on a moving robot?

- OTA safety: Can you roll back updates? Can you stage updates by cohorts? What happens if an update fails mid-write?

- Fleet observability: Do you get CPU/GPU utilization, temperature, storage health, camera link status, and error logs remotely?

- Regulatory and security posture: What do secure boot, key management, and vulnerability patching look like in practice?

This is how you protect timelines. Teams that regret a platform decision usually find out only after the first field deployment.
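
The fleet observability question can be prototyped before you pick a vendor. The sketch below assumes each device reports a small telemetry record. The field names and thresholds are hypothetical placeholders, not values from any Darsi Pro or Jetson specification.

```python
from dataclasses import dataclass

@dataclass
class Telemetry:
    device_id: str
    temp_c: float
    storage_free_pct: float
    camera_links_up: int
    camera_links_expected: int

def health_alerts(t, max_temp_c=85.0, min_storage_free_pct=10.0):
    """Return the alert names raised by one telemetry record.
    Default thresholds are placeholders; tune them to your hardware."""
    alerts = []
    if t.temp_c >= max_temp_c:
        alerts.append("temperature")
    if t.storage_free_pct < min_storage_free_pct:
        alerts.append("storage")
    if t.camera_links_up < t.camera_links_expected:
        alerts.append("camera_link")
    return alerts

# Hypothetical report from a robot with two camera links down.
report = Telemetry("amr-0042", temp_c=71.5, storage_free_pct=42.0,
                   camera_links_up=6, camera_links_expected=8)
print(health_alerts(report))  # prints ['camera_link']
```

If a platform cannot supply the inputs for a check this simple over its remote management channel, that gap tends to surface as site visits later.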

What this launch tells us about 2026: “single-vendor stacks” are winning

e-con Systems frames Darsi Pro as a move from camera supplier to “vision and compute platform partner,” aiming to be a single trusted vendor for compute modules, carrier boards, cameras, and software frameworks.

That’s not just positioning—it’s following the demand curve.

As robotics adoption accelerates globally, buyers are less interested in assembling a parts list and more interested in reducing integration surfaces:

- fewer vendors to coordinate

- fewer compatibility surprises

- clearer support paths

- faster path from pilot to production

Edge AI vision boxes are becoming the standard building block for physical AI because they bring structure to chaos.

If you’re planning robotics deployments in 2026, the question isn’t “Can we run AI on the edge?” You already can. The better question is: Can we operate, update, and scale it reliably across dozens—or thousands—of devices?

Next step

If you’re evaluating edge AI vision for AMRs, warehouse automation, or intelligent transportation systems, map your requirements (camera count, sensors, OTA, temperature range, remote monitoring) before you compare products. The spec sheet is the easy part; operations is where platforms earn their keep.

What’s the one integration issue that has slowed your robotics program the most—camera IO, sensor fusion timing, or fleet management? That answer usually points directly to the platform you should standardize on.